Zhiyang (Frank) Dou

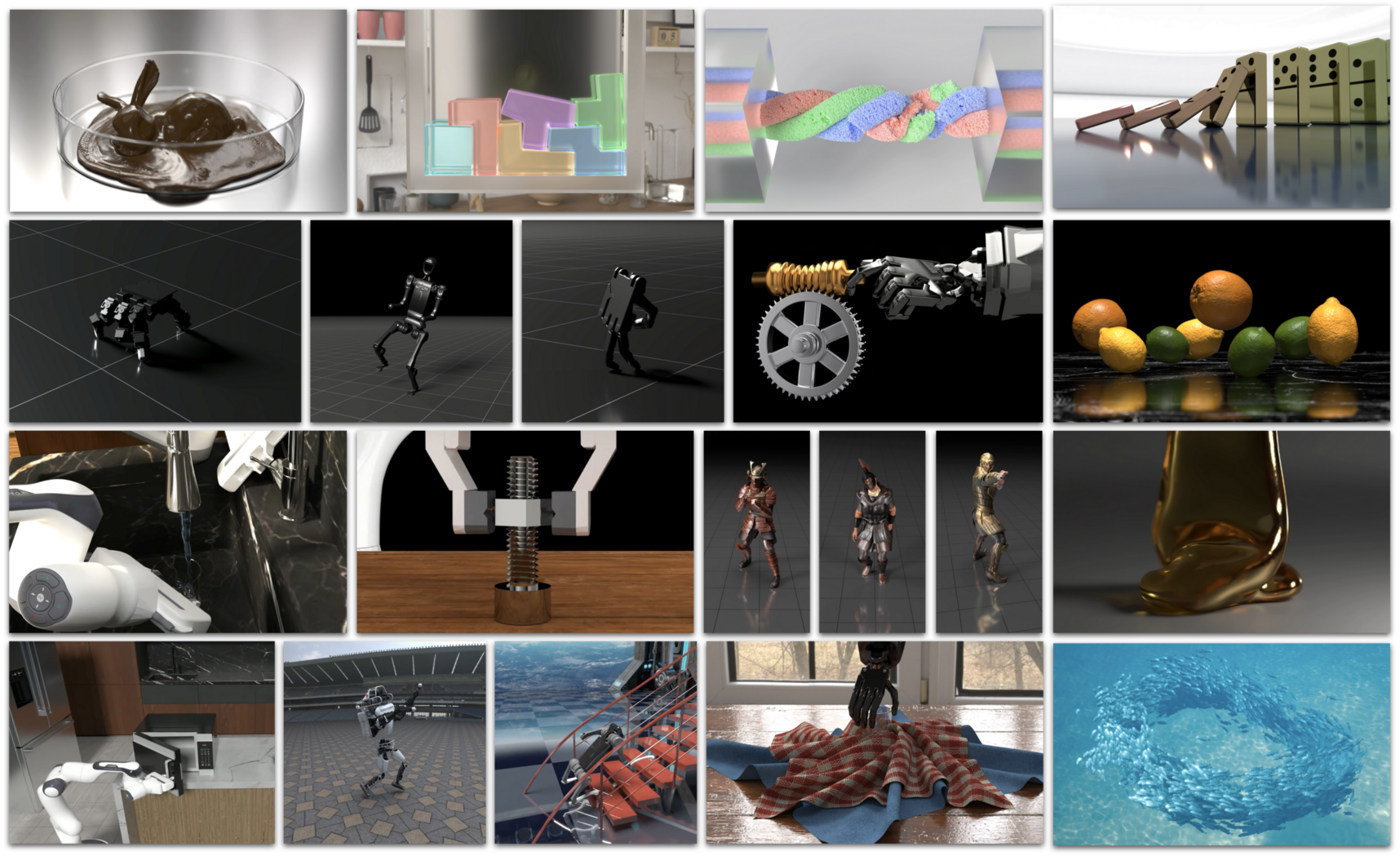

Research Interests (Visualization): Simulation (Physics-based & Cognitive/Psychological); Robotics; Neurosymbolic AI; Computer Graphics (Character Animation; Geometric Computing); Human Behavior Analysis (Capture; Modeling & Simulation).

For students interested in working with me in our group, please refer to the information here.

News

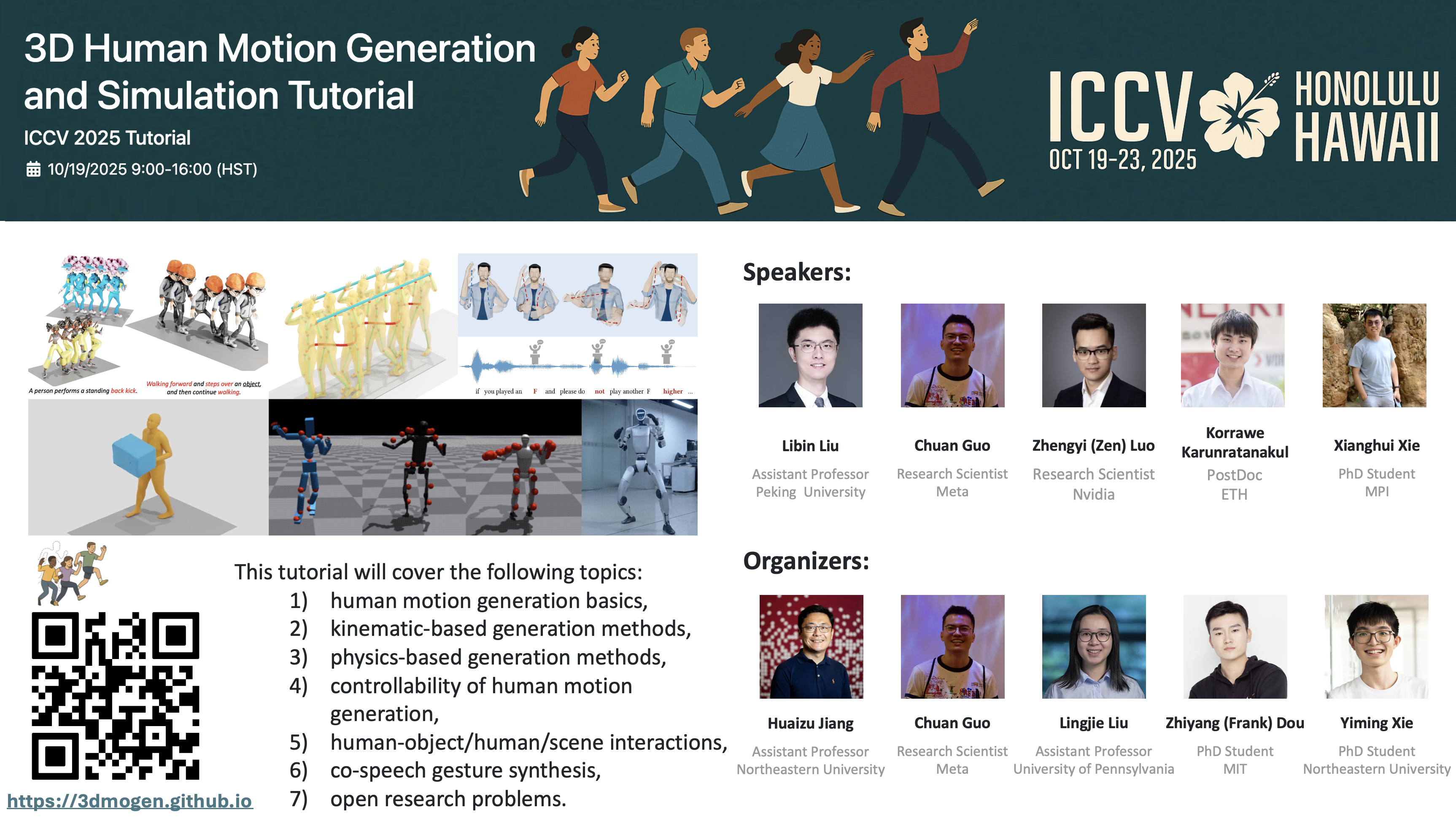

- Jun. 2025: We are holding a tutorial on 3D Human Motion Generation and Simulation at ICCV 2025.

- Mar. 2025: My research statement was accepted to the EG 2025 Doctoral Consortium.

- Feb. 2025: I was selected as a Meshy Fellowship Finalist. Thanks, Meshy!

- Aug. 2024: My research statement was accepted to the ECCV 2024 Doctoral Consortium.

- Jul. 2024: Coverage Axis (EG22) was recognized as a Top Cited Article in CGF for 2022–2023.

- Mar. 2023: GCNO was accepted to SIGGRAPH 2023 and received the SIGGRAPH 2023 Best Paper Award.

Selected Research Works

* Equal Contributions; † Corresponding Authors; cs: coming soon.

- project page (cs)

- paper (cs)

- code (cs)

abstract

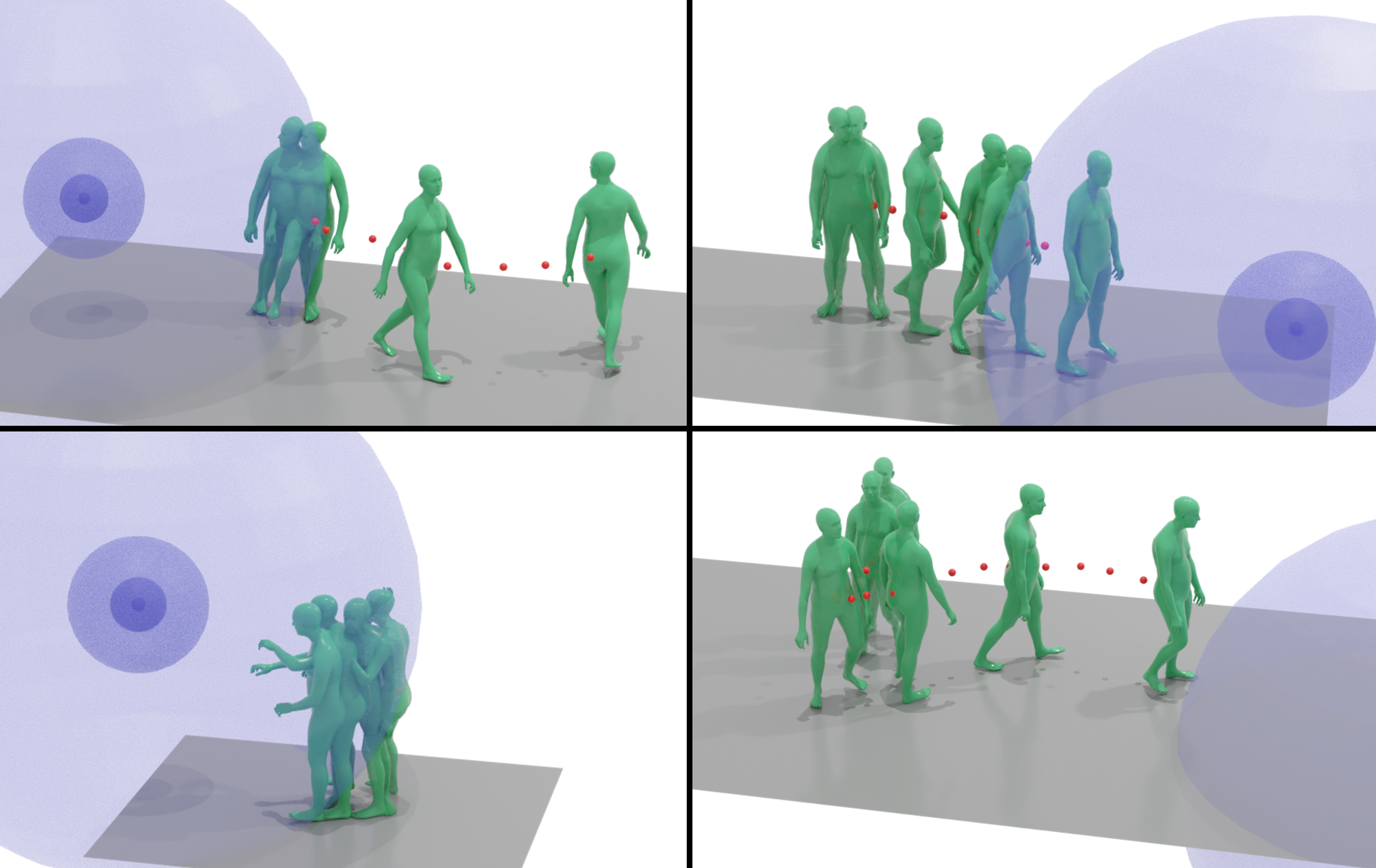

Humans naturally respond to both acoustic cues and semantic intent: spatial audio provides continuous, fine-grained spatiotemporal trajectories, whereas text anchors high-level semantics in discrete units. In this paper, we study the novel task of human motion synthesis jointly conditioned on spatial audio and natural language, a problem that has been largely overlooked in previous research. We introduce STAM, a dataset of motion sequences paired with spatial audio and detailed textual annotations whose rich vocabulary affords precise and nuanced specification of human motions. We present MoSAT, the first baseline to fuse spatial audio and text for fine-grained multimodal control of human motion synthesis. We employ a Motion RVQ-VAE to encode motions into a compact, expressive latent space, and a Spatial Audio VAE that efficiently models spatial audio characteristics. On top of these, an autoregressive transformer predicts human motion from coordinated spatial-audio and text embeddings, followed by residual refinement. Such a hierarchical design yields temporally coherent and semantically aligned motion sequences. We also develop tri-modal evaluators for comprehensive evaluation on this new task. Extensive experiments show that MoSAT achieves state-of-the-art performance by leveraging spatial audio’s intrinsic motion-shaping properties alongside textual semantics, enabling precise and diverse motion in various scenarios. Our code and data will be released upon acceptance.
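As a rough illustration of the residual vector quantization idea behind an RVQ-VAE, the sketch below quantizes a vector with a stack of codebooks, where each level encodes the residual left over by the previous one. The codebooks, their sizes, and the function names are hypothetical and chosen only for illustration; this is not the MoSAT implementation, which learns its codebooks jointly with an encoder and decoder.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def nearest_code(x: Vector, codebook: Sequence[Vector]) -> int:
    """Return the index of the codebook entry closest to x (squared L2)."""
    def sq_dist(code: Vector) -> float:
        return sum((a - b) ** 2 for a, b in zip(x, code))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def residual_quantize(
    x: Vector, codebooks: Sequence[Sequence[Vector]]
) -> Tuple[List[int], List[float]]:
    """Quantize x level by level; each level encodes the remaining residual."""
    residual = list(x)
    reconstruction = [0.0] * len(x)
    indices: List[int] = []
    for codebook in codebooks:
        idx = nearest_code(residual, codebook)
        code = codebook[idx]
        indices.append(idx)
        reconstruction = [r + c for r, c in zip(reconstruction, code)]
        residual = [r - c for r, c in zip(residual, code)]
    return indices, reconstruction


# Hypothetical two-level codebooks: a coarse level followed by a fine level.
coarse = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
fine = [(0.0, 0.0), (0.25, -0.25), (-0.25, 0.25)]
indices, reconstruction = residual_quantize((1.2, 0.8), [coarse, fine])
```

Each additional level shrinks the reconstruction error, which is why a short list of indices can stand in for a fairly detailed latent vector.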

- project page

- paper

- code (cs)

abstract

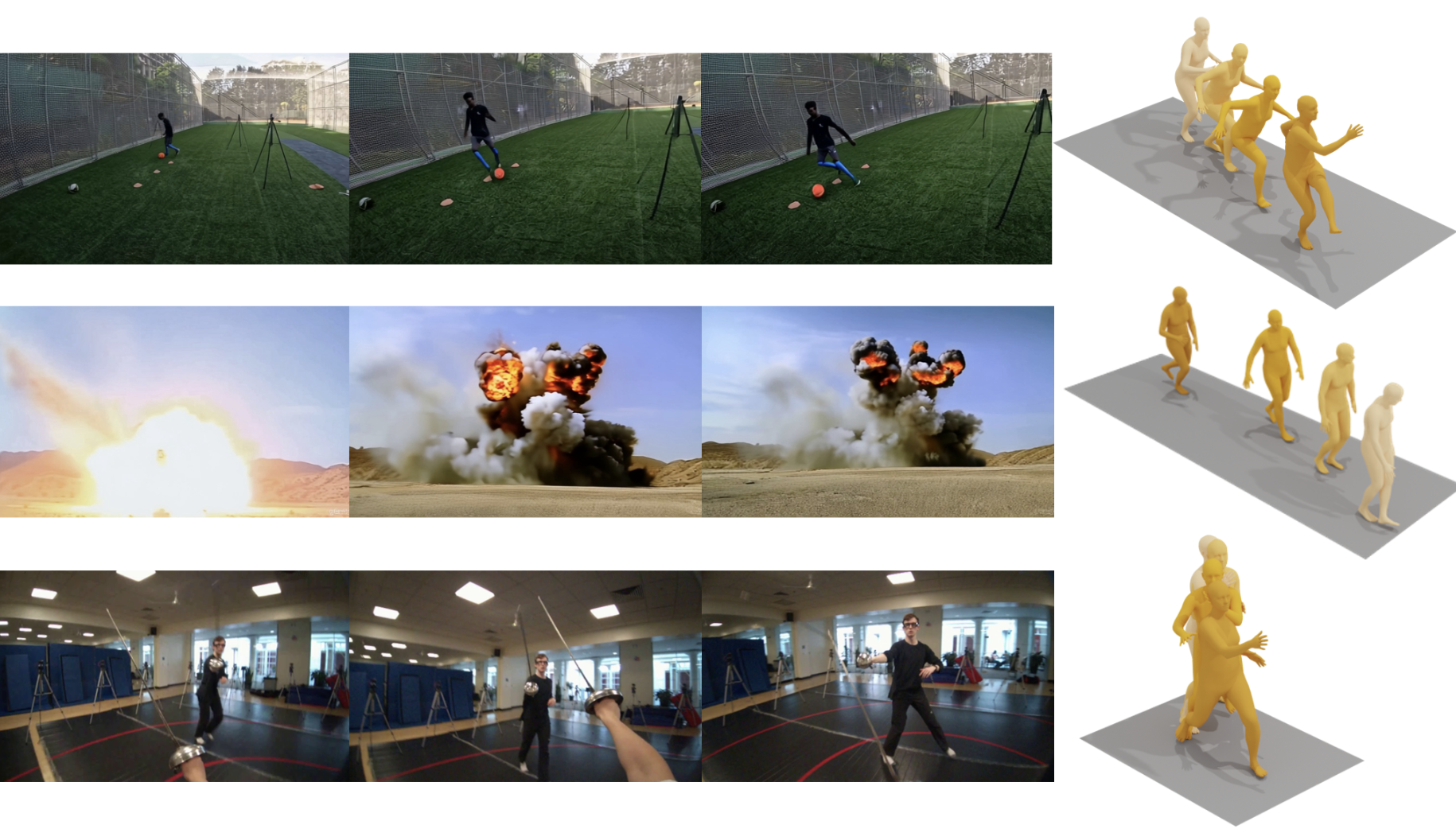

Humans exhibit adaptive, context-sensitive responses to egocentric visual input. However, faithfully modeling such reactions from egocentric video remains challenging due to the dual requirements of strictly causal generation and precise 3D spatial alignment. To tackle this problem, we first construct the Human Reaction Dataset (HRD) to address data scarcity and misalignment by building a spatially aligned egocentric video–reaction dataset, as existing datasets (e.g., ViMo) suffer from significant spatial inconsistency between the egocentric video and reaction motion, e.g., dynamically moving motions are always paired with fixed-camera videos. Leveraging HRD, we present EgoReAct, the first autoregressive framework that generates 3D-aligned human reaction motions from egocentric video streams in real-time. We first compress the reaction motion into a compact yet expressive latent space via a Vector Quantised-Variational AutoEncoder and then train a Generative Pre-trained Transformer for reaction generation from the visual input. EgoReAct incorporates 3D dynamic features, i.e., metric depth, and head dynamics during the generation, which effectively enhance spatial grounding. Extensive experiments demonstrate that EgoReAct achieves remarkably higher realism, spatial consistency, and generation efficiency compared with prior methods, while maintaining strict causality during generation. We will release code, models, and data upon acceptance.

- project page (cs)

- paper

- code (cs)

abstract

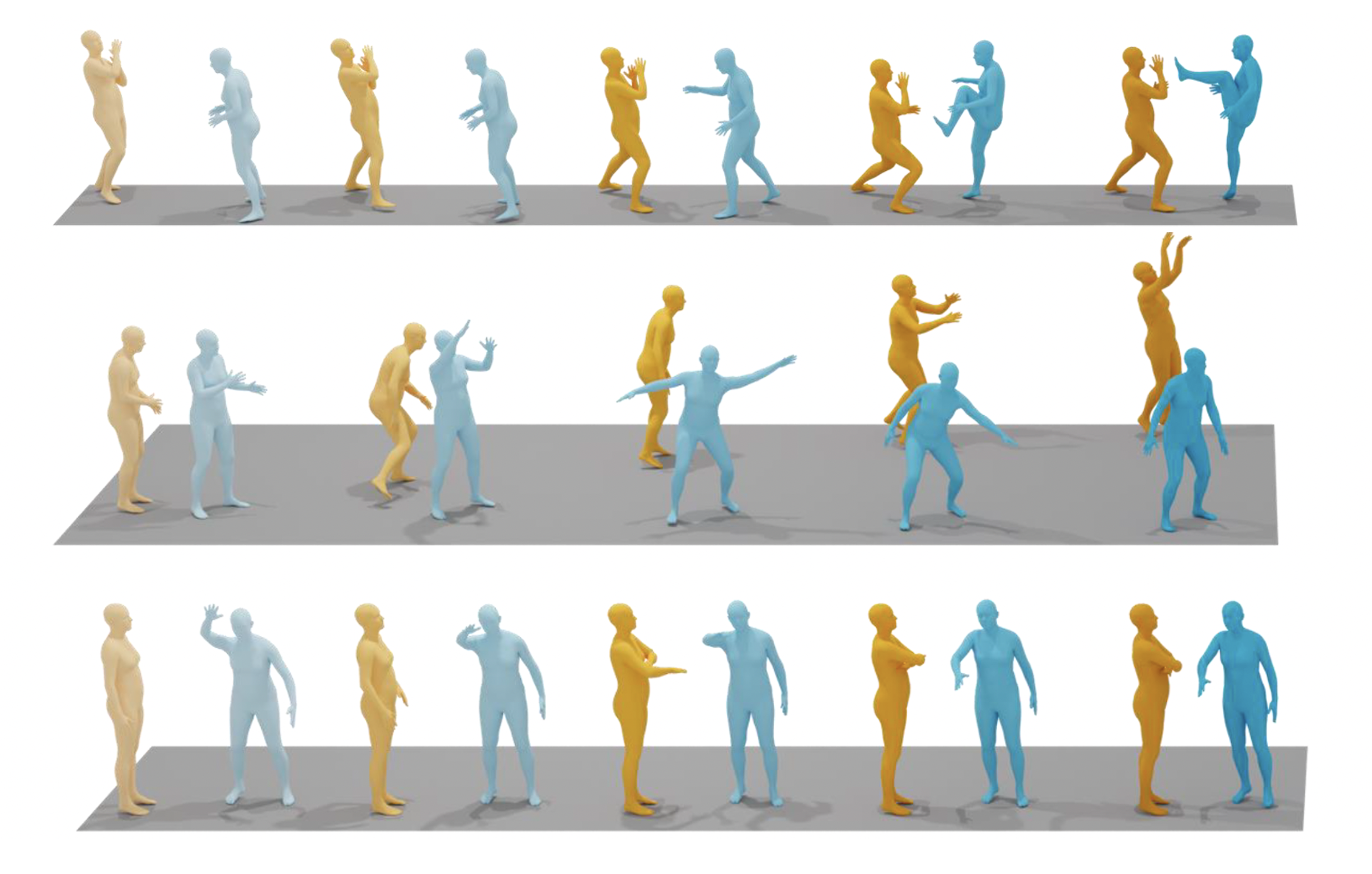

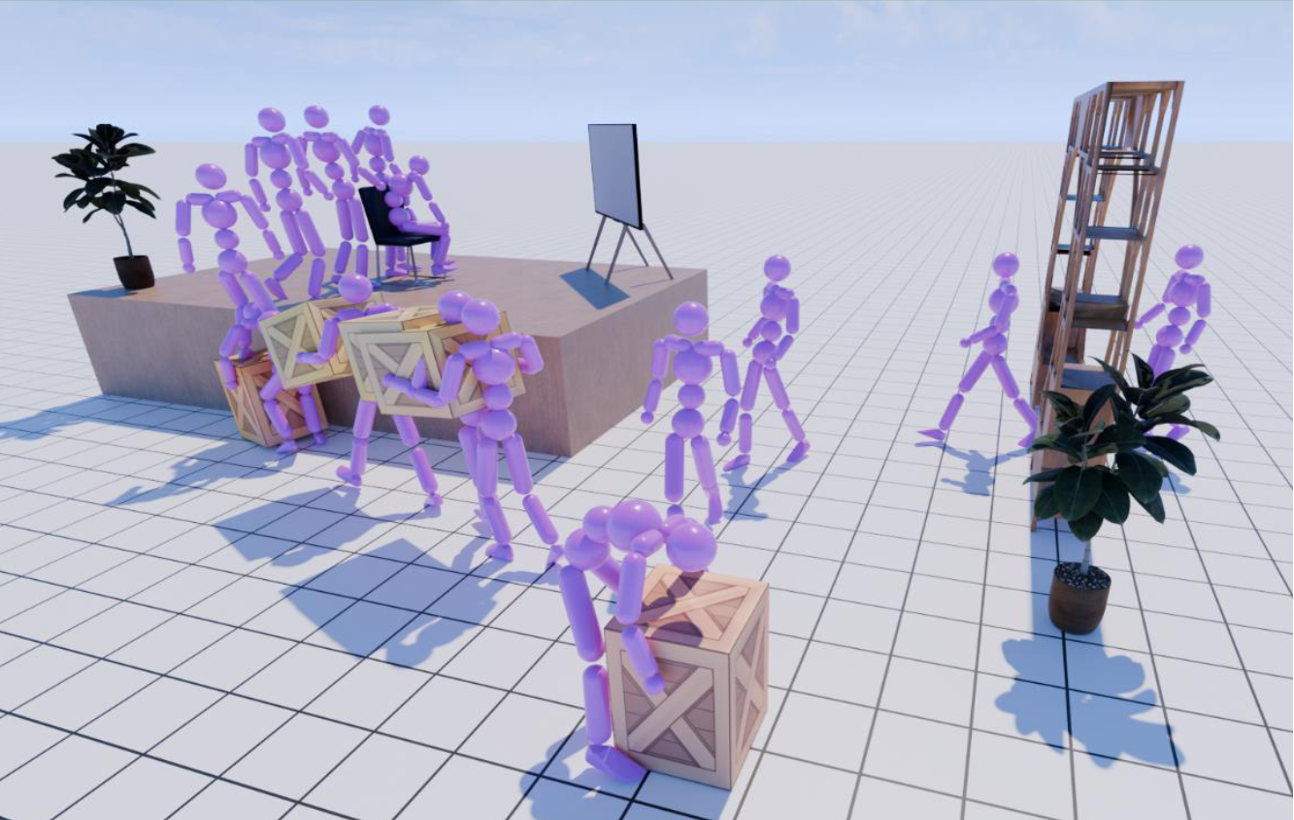

Modeling human-human interactions from text remains challenging because it requires not only realistic individual dynamics but also precise, text-consistent spatiotemporal coupling between agents. Currently, progress is hindered by 1) limited two-person training data, inadequate to capture the diverse intricacies of two-person interactions; and 2) insufficiently fine-grained text-to-interaction modeling, where language conditioning collapses rich, structured prompts into a single sentence embedding. To address these limitations, we propose our Text2Interact framework, designed to generate realistic, text-aligned human-human interactions through a scalable high-fidelity interaction data synthesizer and an effective spatiotemporal coordination pipeline. First, we present InterCompose, a scalable synthesis-by-composition pipeline that aligns LLM-generated interaction descriptions with strong single-person motion priors. Given a prompt and a motion for an agent, InterCompose retrieves candidate single-person motions, trains a conditional reaction generator for another agent, and uses a neural motion evaluator to filter weak or misaligned samples, expanding interaction coverage without extra capture. Second, we propose InterActor, a text-to-interaction model with word-level conditioning that preserves token-level cues (initiation, response, contact ordering) and an adaptive interaction loss that emphasizes contextually relevant inter-person joint pairs, improving coupling and physical plausibility for fine-grained interaction modeling. Extensive experiments show consistent gains in motion diversity, fidelity, and generalization, including out-of-distribution scenarios and user studies. We will release code and models to facilitate reproducibility.

- project page

- paper

- code

abstract

Enabling virtual humans to dynamically and realistically respond to diverse auditory stimuli remains a key challenge in character animation, demanding the integration of perceptual modeling and motion synthesis. Despite its significance, this task remains largely unexplored. Most previous works have primarily focused on mapping modalities such as language, audio, and music to human motion generation. To date, these models typically overlook the impact of spatial features encoded in spatial audio signals on human motion. To bridge this gap and enable high-quality modeling of human movements in response to spatial audio, we introduce the Spatial Audio-Driven Human Motion (SAM) dataset, which contains diverse and high-quality spatial audio and motion data. Furthermore, we develop a diffusion-based generative framework named MOSPA to capture the relationship between body motion and spatial audio with an effective fusion mechanism. After training, MOSPA can generate diverse, realistic human motions conditioned on varying spatial audio inputs. We conducted extensive experiments to validate our method, which achieves state-of-the-art performance on this task. Our model and dataset will be open-sourced upon acceptance. Refer to our supplementary video for more details.

- project page (cs)

- paper (cs)

abstract

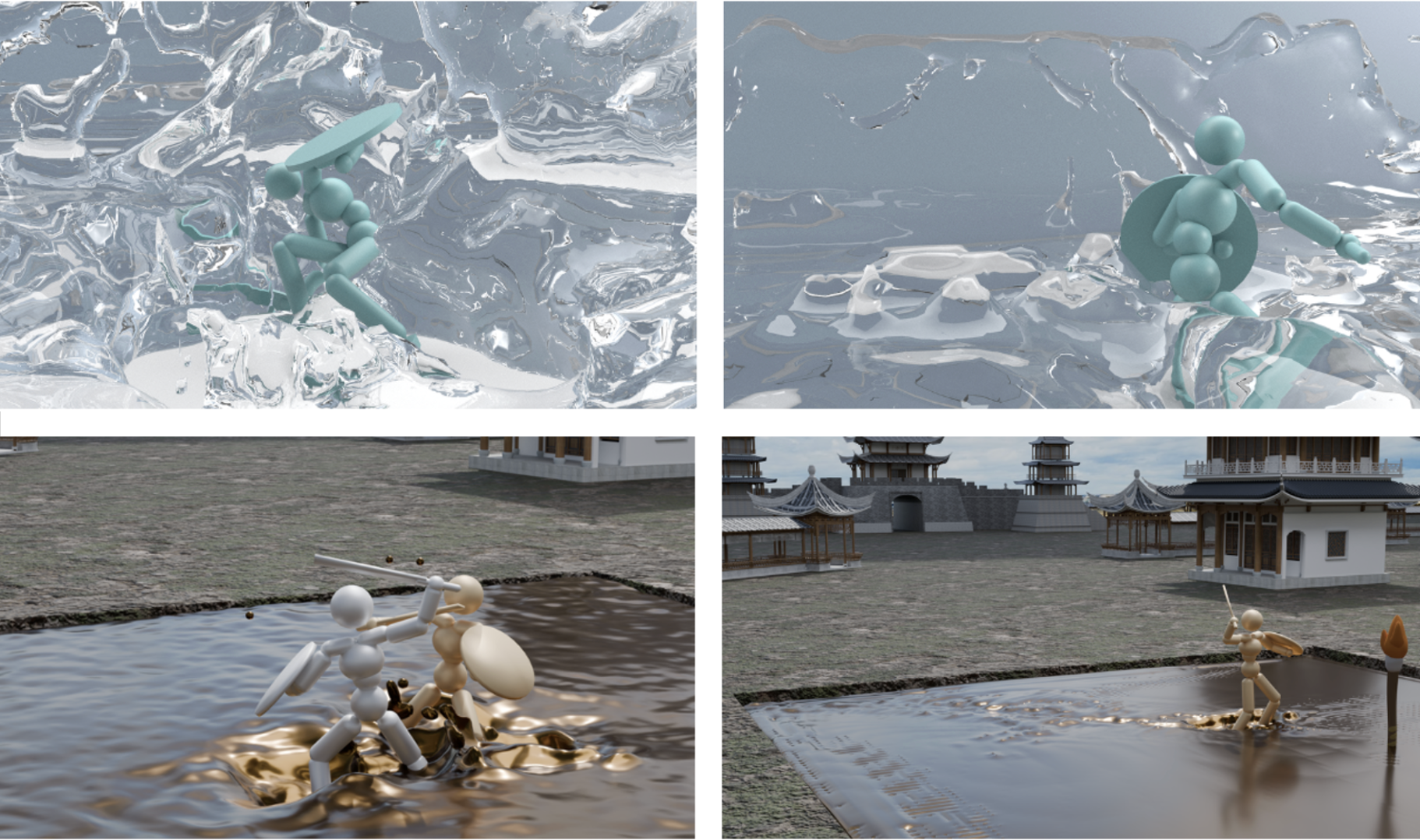

Humans possess the ability to master a wide range of motor skills, which they use to adapt quickly and flexibly to the surrounding environment. Despite recent progress in replicating such versatile human motor skills, existing research often oversimplifies or inadequately captures the complex interplay between human body movements and highly dynamic environments, such as interactions with fluids. In this paper, we present a world model for Character-Fluid Coupling (CFC) for simulating human-fluid interactions via two-way coupling. We introduce a two-level world model which consists of a Physics-Informed Neural Network (PINN)-based model for fluid dynamics and a rigid body world model capturing body dynamics under various external forces. This hierarchical world model adeptly predicts the dynamics of fluid and its influence on rigid bodies, sidestepping the computational burden of fluid simulation and providing policy gradients for efficient policy training. Once trained, our system can control characters to complete high-level tasks while adaptively responding to environmental changes. We also show that the fluid initiates emergent behaviors of the characters, enhancing motion diversity and interactivity. Extensive experiments underscore the effectiveness of CFC, demonstrating its ability to produce high-quality, realistic human-fluid interaction animations.

- project page (cs)

- paper (cs)

abstract

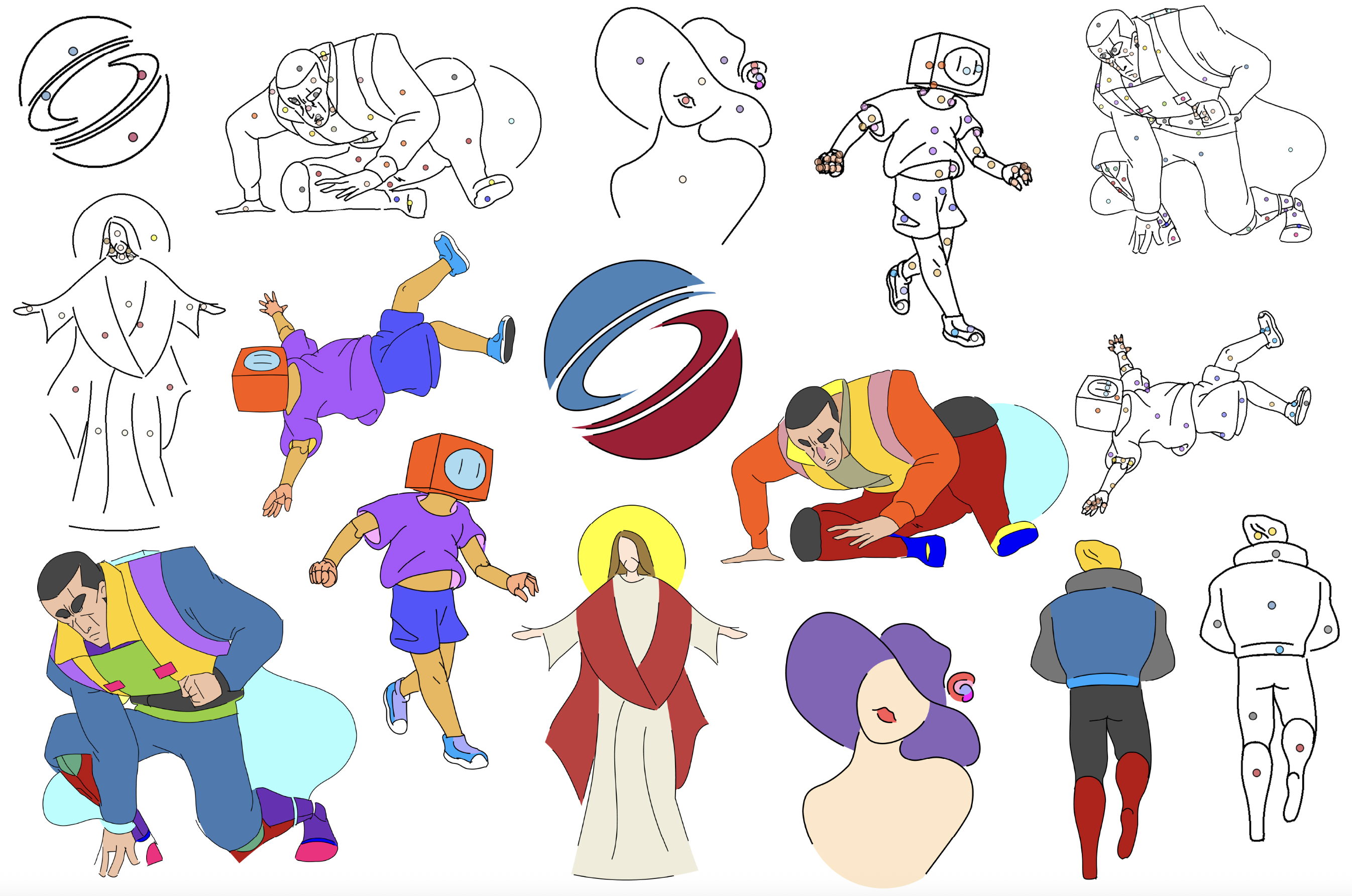

Hand-drawn vector sketches often contain implied lines, imprecise intersections, and unintended gaps, making it challenging to identify closed regions for colorization. These challenges become more pronounced as the number of strokes increases. In this paper, we present KISSColor, a novel method for inferring users' intended closed regions. Specifically, we propose intuitive stroke stretching by extending open strokes along tangent isolines of winding number fields, which provably form geometrically aligned closed regions. Extending all open strokes can lead to overly fragmented regions due to redundant intersections. While a Mixed Integer Programming (MIP) formulation helps reduce redundancy, it is computationally expensive. To improve efficiency, we introduce kinetic stroke stretching, which grows all strokes simultaneously and prioritizes early intersections using a kinetic data structure. This approach preserves stylistic ambiguity for lines requiring long extensions. Based on the growth results, redundant regions are suppressed to minimize fragmentation. We conduct extensive experiments demonstrating the effectiveness of KISSColor, which generates more intuitive partitions, especially for imprecise sketches. Our code and data will be released upon publication.

- project page

- paper

abstract

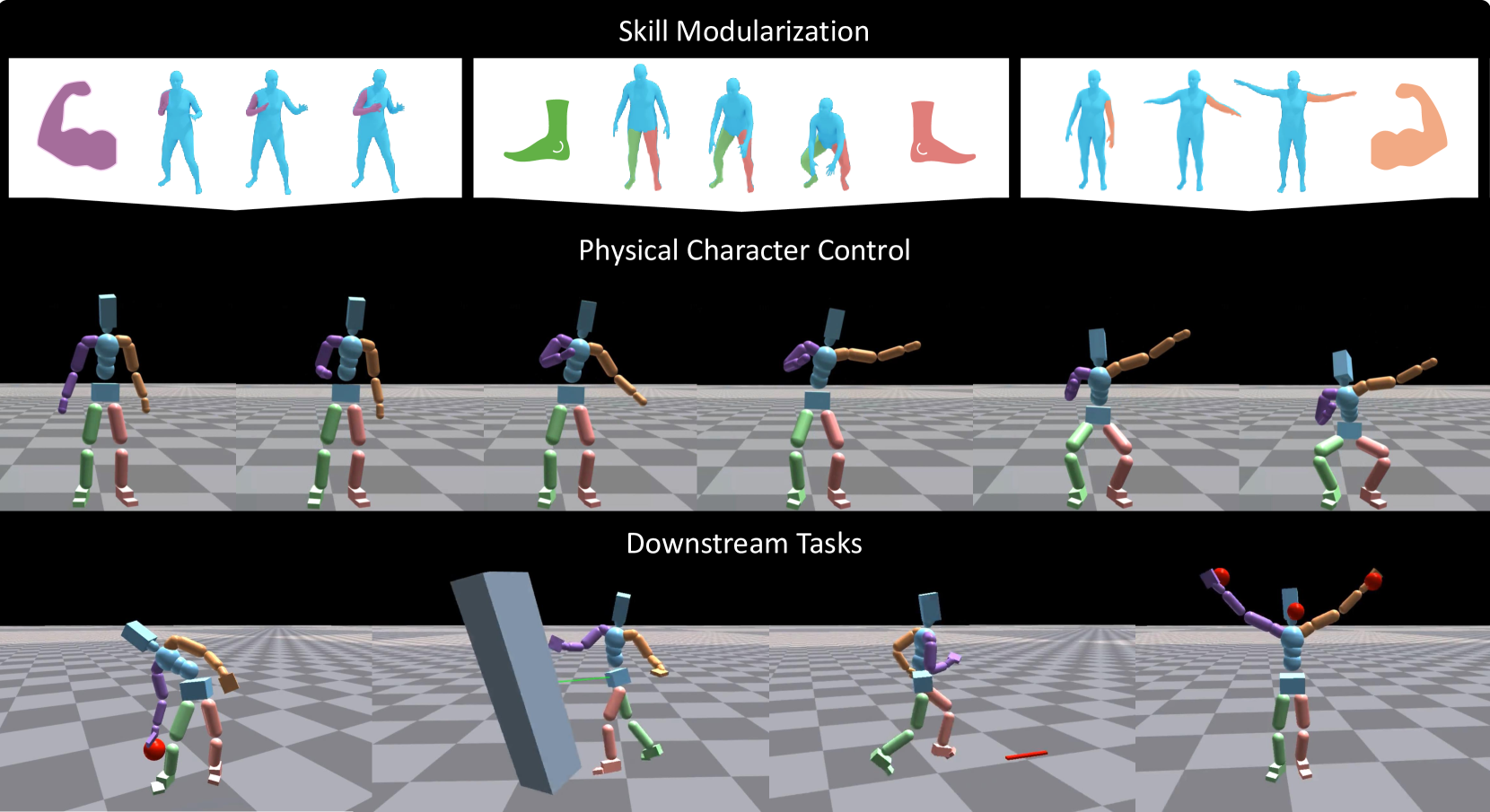

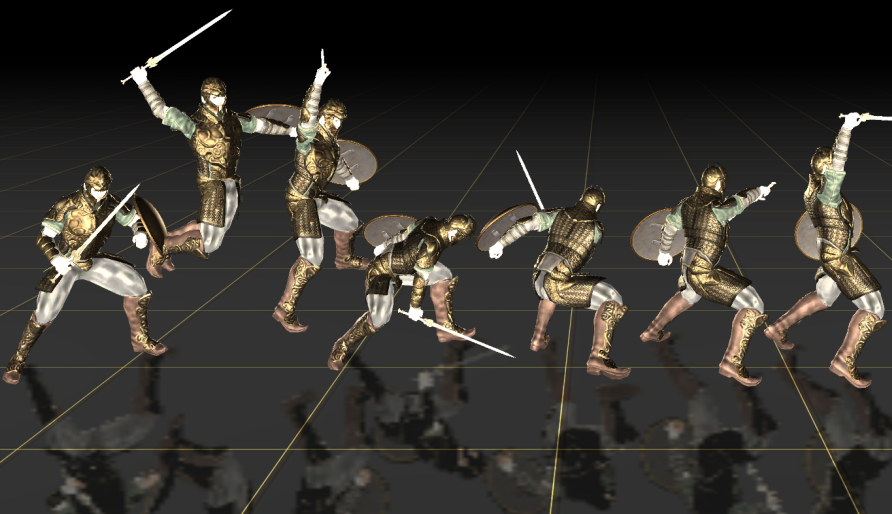

Human motion is highly diverse and dynamic, posing challenges for imitation learning algorithms that aim to generalize motor skills for controlling simulated characters. Previous methods typically rely on a universal full-body controller for tracking reference motion (tracking-based model) or a unified full-body skill embedding space (skill embedding). However, these approaches often struggle to generalize and scale to larger motion datasets. In this work, we introduce a novel skill learning framework, ModSkill, that decouples complex full-body skills into compositional, modular skills for independent body parts. Our framework features a skill modularization attention layer that processes policy observations into modular skill embeddings that guide low-level controllers for each body part. We also propose an Active Skill Learning approach with Generative Adaptive Sampling, using large motion generation models to adaptively enhance policy learning in challenging tracking scenarios. Our results show that this modularized skill learning framework, enhanced by generative sampling, outperforms existing methods in precise full-body motion tracking and enables reusable skill embeddings for diverse goal-driven tasks.

- project page

- paper

- code

abstract

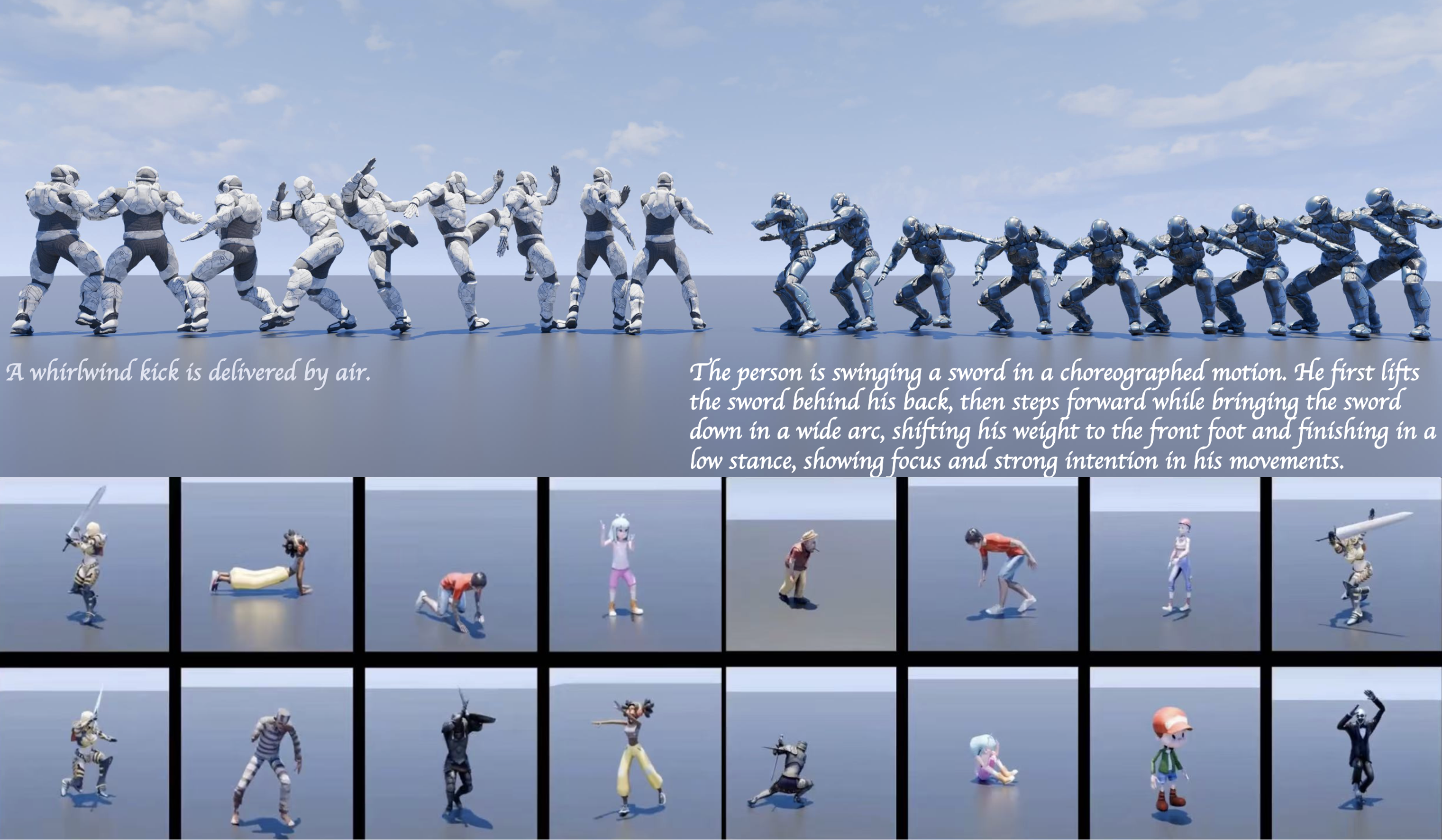

Generating diverse and natural human motion sequences based on textual descriptions constitutes a fundamental and challenging research area within the domains of computer vision, graphics, and robotics. Despite significant advancements in this field, current methodologies often face challenges regarding zero-shot generalization capabilities, largely attributable to the limited size of training datasets. Moreover, the lack of a comprehensive evaluation framework impedes the advancement of this task by failing to identify directions for improvement. In this work, we aim to push text-to-motion into a new era, that is, to achieve zero-shot generalization. To this end, we first develop an efficient annotation pipeline and introduce MotionMillion, the largest human motion dataset to date, featuring over 2,000 hours and 2 million high-quality motion sequences. Additionally, we propose MotionMillion-Eval, the most comprehensive benchmark for evaluating zero-shot motion generation. Leveraging a scalable architecture, we scale our model to 7B parameters and validate its performance on MotionMillion-Eval. Our results demonstrate strong generalization to out-of-domain and complex compositional motions, marking a significant step toward zero-shot human motion generation. The code is available at this https URL.

- project page

- paper

- code

abstract

Synthesizing diverse and physically plausible Human-Scene Interactions (HSI) is pivotal for both computer animation and embodied AI. Despite encouraging progress, current methods mainly focus on developing separate controllers, each specialized for a specific interaction task. This significantly hinders the ability to tackle a wide variety of challenging HSI tasks that require the integration of multiple skills, e.g., sitting down while carrying an object. To address this issue, we present TokenHSI, a single, unified transformer-based policy capable of multi-skill unification and flexible adaptation. The key insight is to model the humanoid proprioception as a separate shared token and combine it with distinct task tokens via a masking mechanism. Such a unified policy enables effective knowledge sharing across skills, thereby facilitating the multi-task training. Moreover, our policy architecture supports variable length inputs, enabling flexible adaptation of learned skills to new scenarios. By training additional task tokenizers, we can not only modify the geometries of interaction targets but also coordinate multiple skills to address complex tasks. The experiments demonstrate that our approach can significantly improve versatility, adaptability, and extensibility in various HSI tasks.

- project page

- paper

- code

abstract

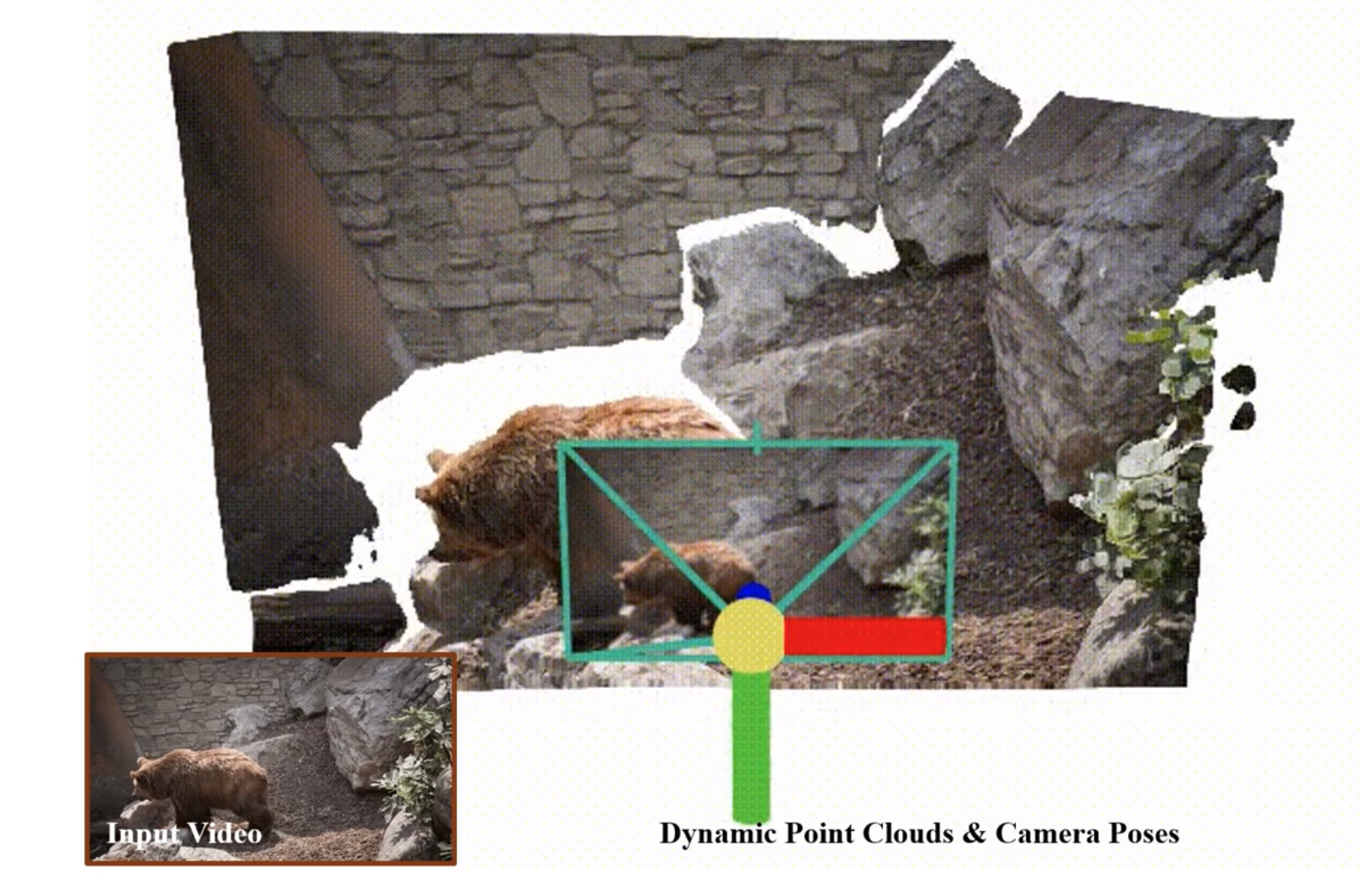

Recent developments in monocular depth estimation methods enable high-quality depth estimation of single-view images but fail to estimate consistent video depth across different frames. Recent works address this problem by applying a video diffusion model to generate video depth conditioned on the input video, which is expensive to train and can only produce scale-invariant depth values without camera poses. In this paper, we propose a novel video-depth estimation method called Align3R to estimate temporally consistent depth maps for a dynamic video. Our key idea is to utilize the recent DUSt3R model to align estimated monocular depth maps of different timesteps. First, we fine-tune the DUSt3R model with additional estimated monocular depth as inputs for the dynamic scenes. Then, we apply optimization to reconstruct both depth maps and camera poses. Extensive experiments demonstrate that Align3R estimates consistent video depth and camera poses for a monocular video with performance superior to that of baseline methods.

- project page

- paper

- code

abstract

Faithfully reconstructing textured shapes and physical properties from videos presents an intriguing yet challenging problem. Significant efforts have been dedicated to advancing such a system identification problem in this area. Previous methods often rely on heavy optimization pipelines with a differentiable simulator and renderer to estimate physical parameters. However, these approaches frequently necessitate extensive hyperparameter tuning for each scene and involve a costly optimization process, which limits both their practicality and generalizability. In this work, we propose a novel framework, Vid2Sim, a generalizable video-based approach for recovering geometry and physical properties through a mesh-free reduced simulation based on Linear Blend Skinning (LBS), offering high computational efficiency and versatile representation capability. Specifically, Vid2Sim first reconstructs the observed configuration of the physical system from video using a feed-forward neural network trained to capture physical world knowledge. A lightweight optimization pipeline then refines the estimated appearance, geometry, and physical properties to closely align with video observations within just a few minutes. Additionally, after the reconstruction, Vid2Sim enables high-quality, mesh-free simulation with high efficiency. Extensive experiments demonstrate that our method achieves superior accuracy and efficiency in reconstructing geometry and physical properties from video data.

- project page

- paper

- code

abstract

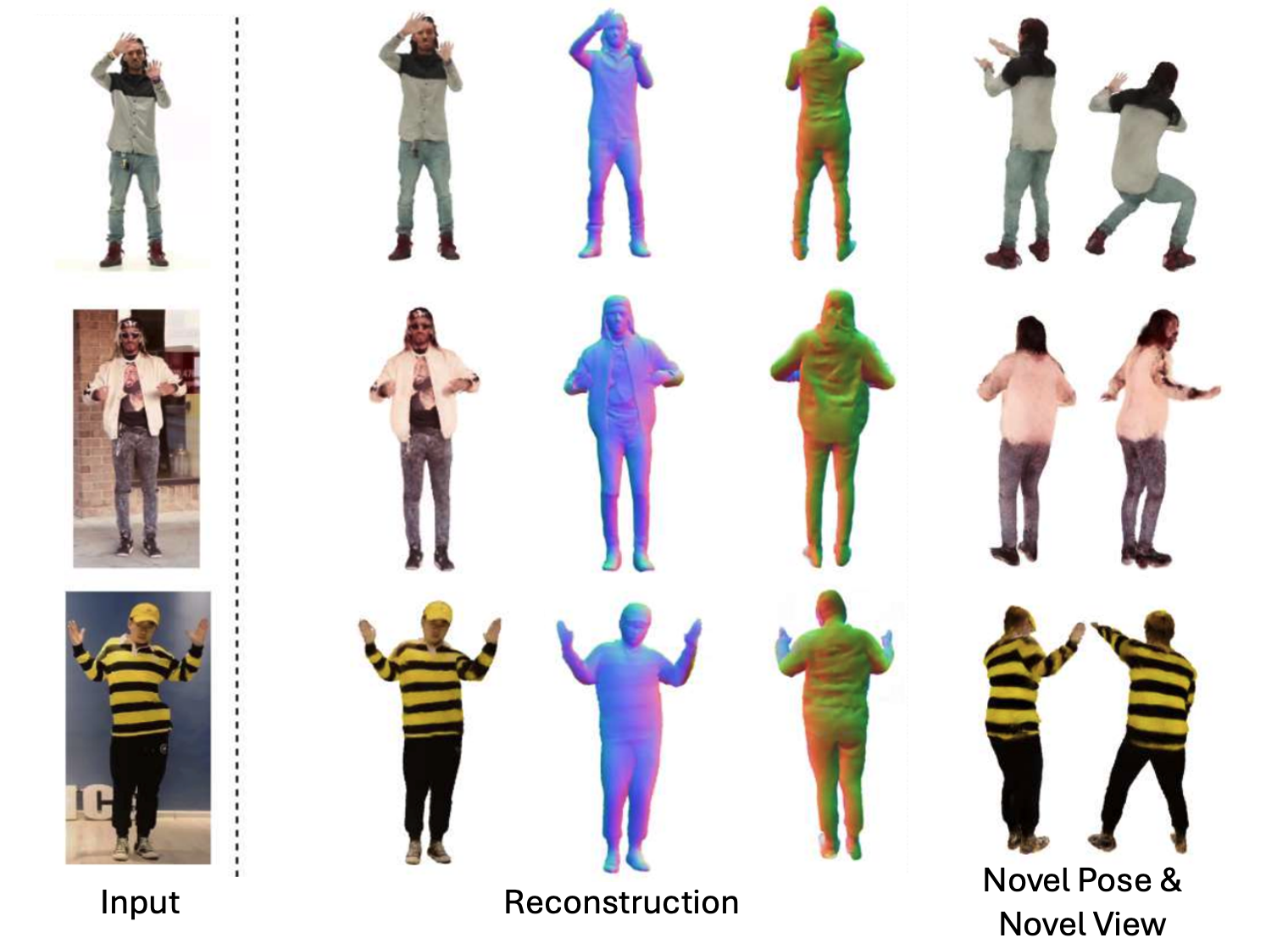

In this paper, we present WonderHuman to reconstruct dynamic human avatars from a monocular video for high-fidelity novel view synthesis. Previous dynamic human avatar reconstruction methods typically require the input video to have full coverage of the observed human body. However, in daily practice, one typically has access to limited viewpoints, such as monocular front-view videos, making it a cumbersome task for previous methods to reconstruct the unseen parts of the human avatar. To tackle the issue, we present WonderHuman, which leverages 2D generative diffusion model priors to achieve high-quality, photorealistic reconstructions of dynamic human avatars from monocular videos, including accurate rendering of unseen body parts. Our approach introduces a Dual-Space Optimization technique, applying Score Distillation Sampling (SDS) in both canonical and observation spaces to ensure visual consistency and enhance realism in dynamic human reconstruction. Additionally, we present a View Selection strategy and Pose Feature Injection to enforce the consistency between SDS predictions and observed data, ensuring pose-dependent effects and higher fidelity in the reconstructed avatar. In the experiments, our method achieves SOTA performance in producing photorealistic renderings from the given monocular video, particularly for those challenging unseen parts.

- project page

- paper

- code

abstract

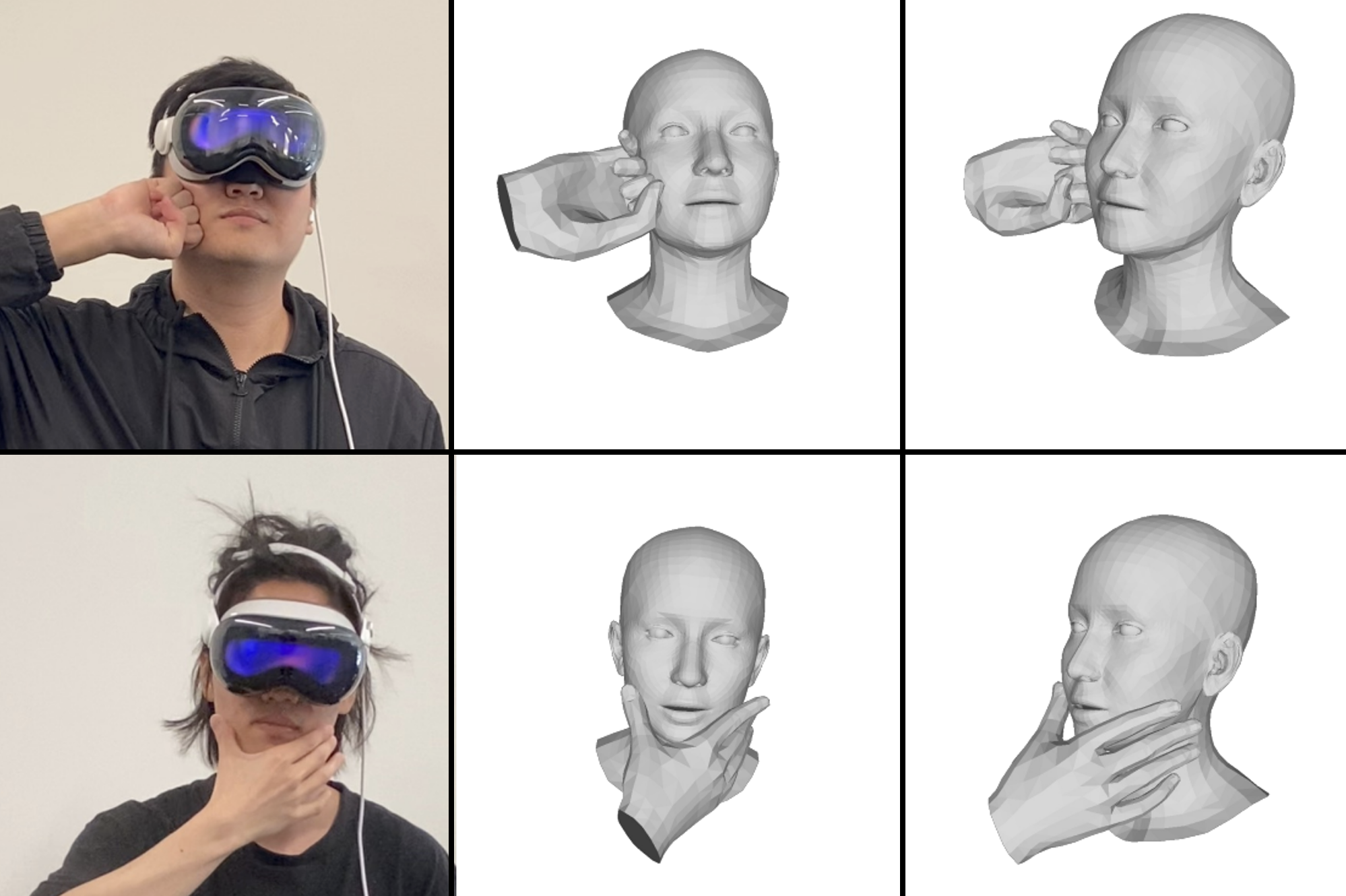

Reconstructing 3D hand-face interactions with deformations from a single image is a challenging yet crucial task with broad applications in AR, VR, and gaming. The challenges stem from self-occlusions during single-view hand-face interactions, diverse spatial relationships between hands and face, complex deformations, and the ambiguity of the single-view setting. The first and only method for hand-face interaction recovery, Decaf, introduces a global fitting optimization guided by contact and deformation estimation networks trained on studio-collected data with 3D annotations. However, Decaf suffers from a time-consuming optimization process and limited generalization capability due to its reliance on 3D annotations of hand-face interaction data. To address these issues, we present DICE, the first end-to-end method for Deformation-aware hand-face Interaction reCovEry from a single image. DICE estimates the poses of hands and faces, contacts, and deformations simultaneously using a Transformer-based architecture. It features disentangling the regression of local deformation fields and global mesh vertex locations into two network branches, enhancing deformation and contact estimation for precise and robust hand-face mesh recovery. To improve generalizability, we propose a weakly-supervised training approach that augments the training set using in-the-wild images without 3D ground-truth annotations, employing the depths of 2D keypoints estimated by off-the-shelf models and adversarial priors of poses for supervision. Our experiments demonstrate that DICE achieves state-of-the-art performance on a standard benchmark and in-the-wild data in terms of accuracy and physical plausibility. Additionally, our method operates at an interactive rate (20 fps) on an Nvidia 4090 GPU, whereas Decaf requires more than 15 seconds for a single image. Our code will be publicly available upon publication.

- project page

- paper

abstract

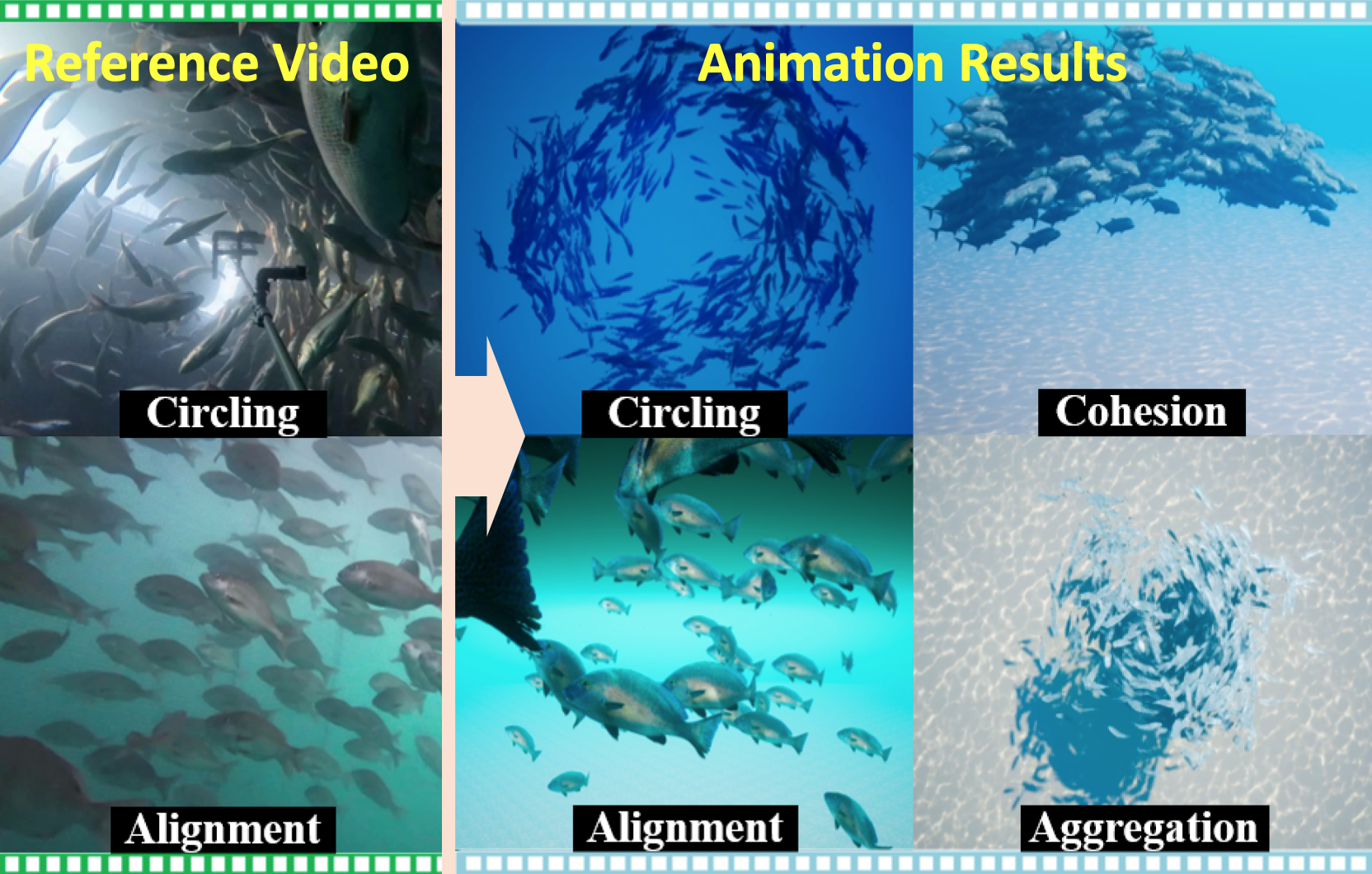

Reproducing realistic collective behaviors presents a captivating yet formidable challenge. Traditional rule-based methods rely on hand-crafted principles, limiting motion diversity and realism in generated collective behaviors. Recent imitation learning methods learn from data but often require ground truth motion trajectories and struggle with authenticity, especially in high-density groups with erratic movements. In this paper, we present a scalable approach, Collective Behavior Imitation Learning (CBIL), for learning fish schooling behavior directly from videos, without relying on captured motion trajectories. Our method first leverages Video Representation Learning, where a Masked Video AutoEncoder (MVAE) extracts implicit states from video inputs in a self-supervised manner. The MVAE effectively maps 2D observations to implicit states that are compact and expressive for the subsequent imitation learning stage. Then, we propose a novel adversarial imitation learning method to effectively capture complex movements of the schools of fish, allowing for efficient imitation of the distribution of motion patterns measured in the latent space. It also incorporates bio-inspired rewards alongside priors to regularize and stabilize training. Once trained, CBIL can be used for various animation tasks with the learned collective motion priors. We further show its effectiveness across different species. Finally, we demonstrate the application of our system in detecting abnormal fish behavior from in-the-wild videos.

- project page

- paper

abstract

Modeling temporal characteristics and the non-stationary dynamics of body movement plays a significant role in predicting future human motions. However, it is challenging to capture these features due to the subtle transitions involved in complex human motions. This paper introduces MotionWavelet, a human motion prediction framework that utilizes Wavelet Transformation and studies human motion patterns in the spatial-frequency domain. In MotionWavelet, a Wavelet Diffusion Model (WDM) learns a Wavelet Manifold by applying Wavelet Transformation to the motion data, thereby encoding the intricate spatial and temporal motion patterns. Once the Wavelet Manifold is built, WDM trains a diffusion model to generate human motions from Wavelet latent vectors. In addition to the WDM, MotionWavelet also presents a Wavelet Space Shaping Guidance mechanism to refine the denoising process to improve conformity with the manifold structure. WDM also develops Temporal Attention-Based Guidance to enhance prediction accuracy. Extensive experiments validate the effectiveness of MotionWavelet, demonstrating improved prediction accuracy and enhanced generalization across various benchmarks. Our code and models will be released upon acceptance.
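To make the wavelet step more concrete, here is a minimal sketch of a single-level discrete wavelet transform applied along the time axis of one joint coordinate. It assumes the orthonormal Haar wavelet purely for simplicity; the wavelet family, the trajectory values, and the function names are illustrative assumptions, not details taken from MotionWavelet.

```python
import math
from typing import List, Sequence, Tuple


def haar_dwt(signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar transform (even-length input)."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = 1.0 / math.sqrt(2.0)
    # Approximation: scaled pairwise sums (low-frequency content).
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    # Detail: scaled pairwise differences (high-frequency content).
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx: Sequence[float], detail: Sequence[float]) -> List[float]:
    """Invert haar_dwt, recovering the original samples."""
    s = 1.0 / math.sqrt(2.0)
    restored: List[float] = []
    for a, d in zip(approx, detail):
        restored.append((a + d) * s)
        restored.append((a - d) * s)
    return restored


# Hypothetical trajectory of one joint coordinate over 8 frames.
trajectory = [0.0, 0.1, 0.4, 0.9, 1.0, 0.9, 0.4, 0.1]
approx, detail = haar_dwt(trajectory)
restored = haar_idwt(approx, detail)
```

The approximation coefficients capture the slow trend of the movement while the detail coefficients isolate frame-to-frame transitions, and the transform is invertible, so no motion information is lost by working in the wavelet domain.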

- project page

- paper

- code

abstract

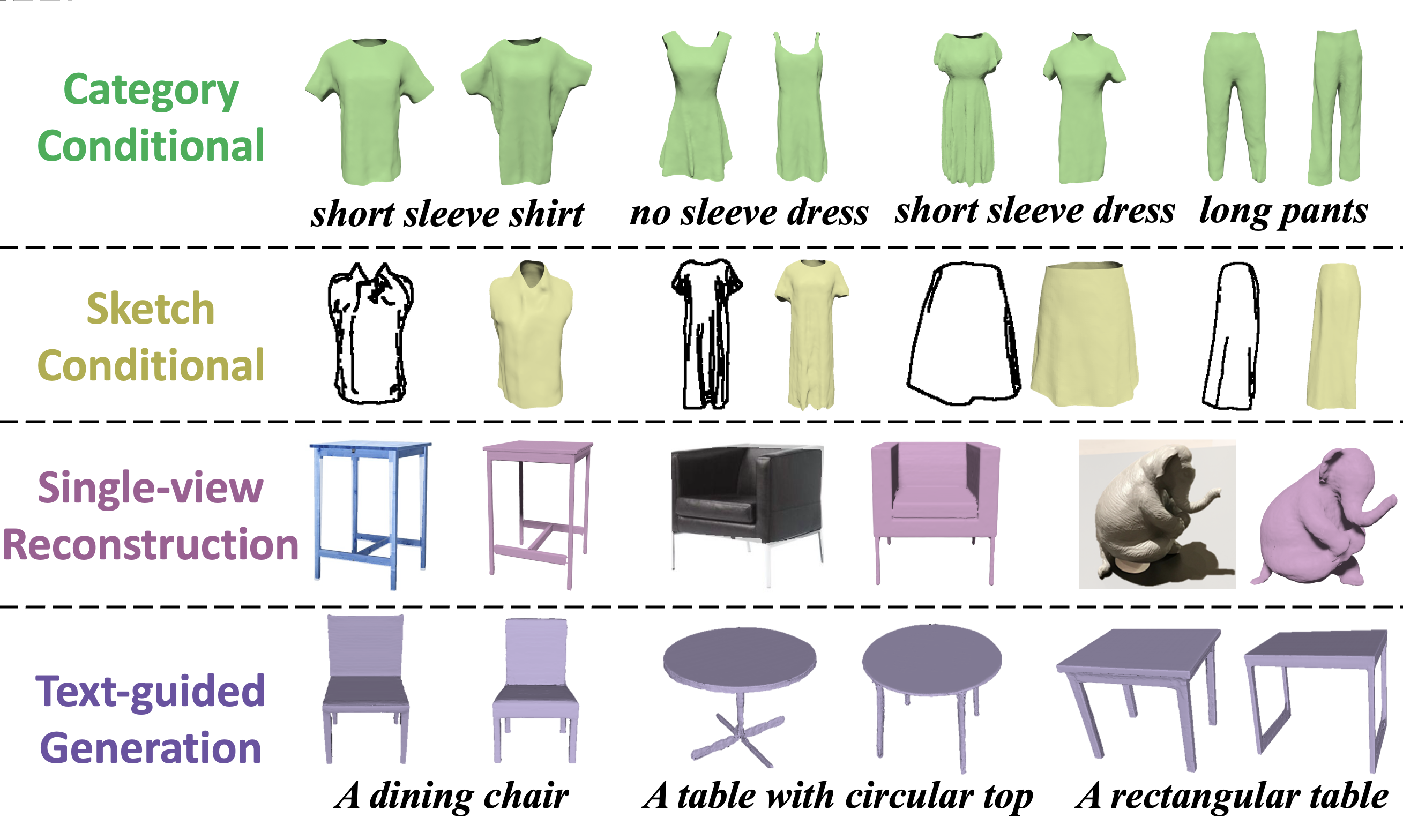

In this paper, we present Surf-D, a novel method for generating high-quality 3D shapes as Surfaces with arbitrary topologies using Diffusion models. Specifically, we adopt Unsigned Distance Field (UDF) as the surface representation, as it excels in handling arbitrary topologies, enabling the generation of complex shapes. While prior methods have explored shape generation with different representations, they suffer from limited topologies and geometric details. Moreover, it is non-trivial to directly extend prior diffusion models to UDF because they lack spatial continuity due to the discrete volume structure. However, UDF requires accurate gradients for mesh extraction and learning. To tackle these issues, we first leverage a point-based auto-encoder to learn a compact latent space, which supports gradient querying for any input point through differentiation to effectively capture intricate geometry at a high resolution. Since the learning difficulty for various shapes can differ, a curriculum learning strategy is employed to efficiently embed various surfaces, enhancing the whole embedding process. With the pretrained shape latent space, we employ a latent diffusion model to acquire the distribution of various shapes. We conduct extensive experiments on unconditional generation, category-conditional generation, 3D reconstruction from images, and text-to-shape tasks, in which our approach demonstrates superior performance in shape generation across multiple modalities. Our code will be publicly available upon paper publication.
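The role of the UDF and its gradient can be illustrated with a small analytic example. The sketch below evaluates the unsigned distance to an open surface (a unit square patch in the z = 0 plane) and estimates its gradient by central finite differences. Both the patch and the finite-difference gradient are assumptions made for illustration; Surf-D queries gradients by differentiating a learned network rather than an analytic field.

```python
import math
from typing import Callable, List, Sequence

Point = Sequence[float]


def udf_square_patch(p: Point) -> float:
    """Unsigned distance to the open patch [0, 1] x [0, 1] in the plane z = 0."""
    x, y, z = p
    dx = max(-x, 0.0, x - 1.0)  # distance outside the patch along x
    dy = max(-y, 0.0, y - 1.0)  # distance outside the patch along y
    return math.sqrt(dx * dx + dy * dy + z * z)


def udf_gradient(udf: Callable[[Point], float], p: Point, eps: float = 1e-5) -> List[float]:
    """Central finite-difference estimate of the UDF gradient at p."""
    grad: List[float] = []
    for axis in range(3):
        hi, lo = list(p), list(p)
        hi[axis] += eps
        lo[axis] -= eps
        grad.append((udf(hi) - udf(lo)) / (2.0 * eps))
    return grad


above = udf_square_patch((0.5, 0.5, 0.3))
below = udf_square_patch((0.5, 0.5, -0.3))
grad_above = udf_gradient(udf_square_patch, (0.5, 0.5, 0.3))
grad_below = udf_gradient(udf_square_patch, (0.5, 0.5, -0.3))
```

The distance is identical on both sides of the patch, so no inside/outside labeling is required, which is what lets a UDF describe open surfaces. The gradient points away from the surface on either side, and stepping against it by the distance value lands back on the surface, which is why accurate gradients matter for mesh extraction.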

- project page

- paper

- video

- code

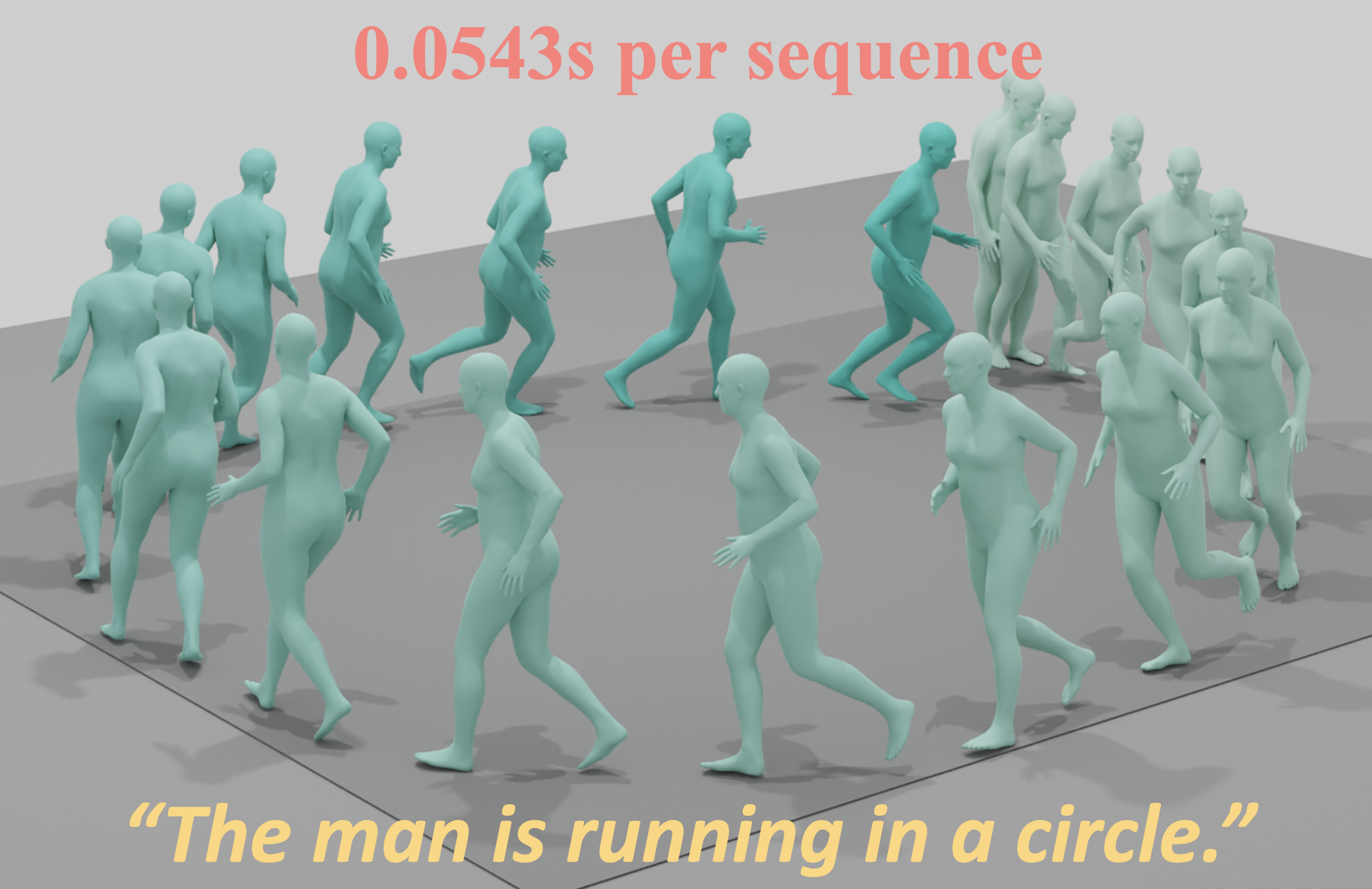

abstract

We introduce Efficient Motion Diffusion Model (EMDM) for fast and high-quality human motion generation. Although previous motion diffusion models have shown impressive results, they struggle to achieve fast generation while maintaining high-quality human motions. Motion latent diffusion has been proposed for efficient motion generation. However, effectively learning a latent space can be non-trivial in such a two-stage manner. Meanwhile, accelerating motion sampling by increasing the step size, e.g., DDIM, typically leads to a decline in motion quality because complex data distributions cannot be approximated well when the step size is naively increased. In this paper, we propose EMDM, which allows for far fewer sampling steps for fast motion generation by modeling the complex denoising distribution during multiple sampling steps. Specifically, we develop a Conditional Denoising Diffusion GAN to capture multimodal data distributions conditioned on both control signals, i.e., textual description and denoising time step. By modeling the complex data distribution, a larger sampling step size and fewer steps are achieved during motion synthesis, significantly accelerating the generation process. To effectively capture the human dynamics and reduce undesired artifacts, we employ motion geometric loss during network training, which improves the motion quality and training efficiency. As a result, EMDM achieves a remarkable speed-up at the generation stage while maintaining high-quality motion generation in terms of fidelity and diversity.

- project page

- paper

- video

- code

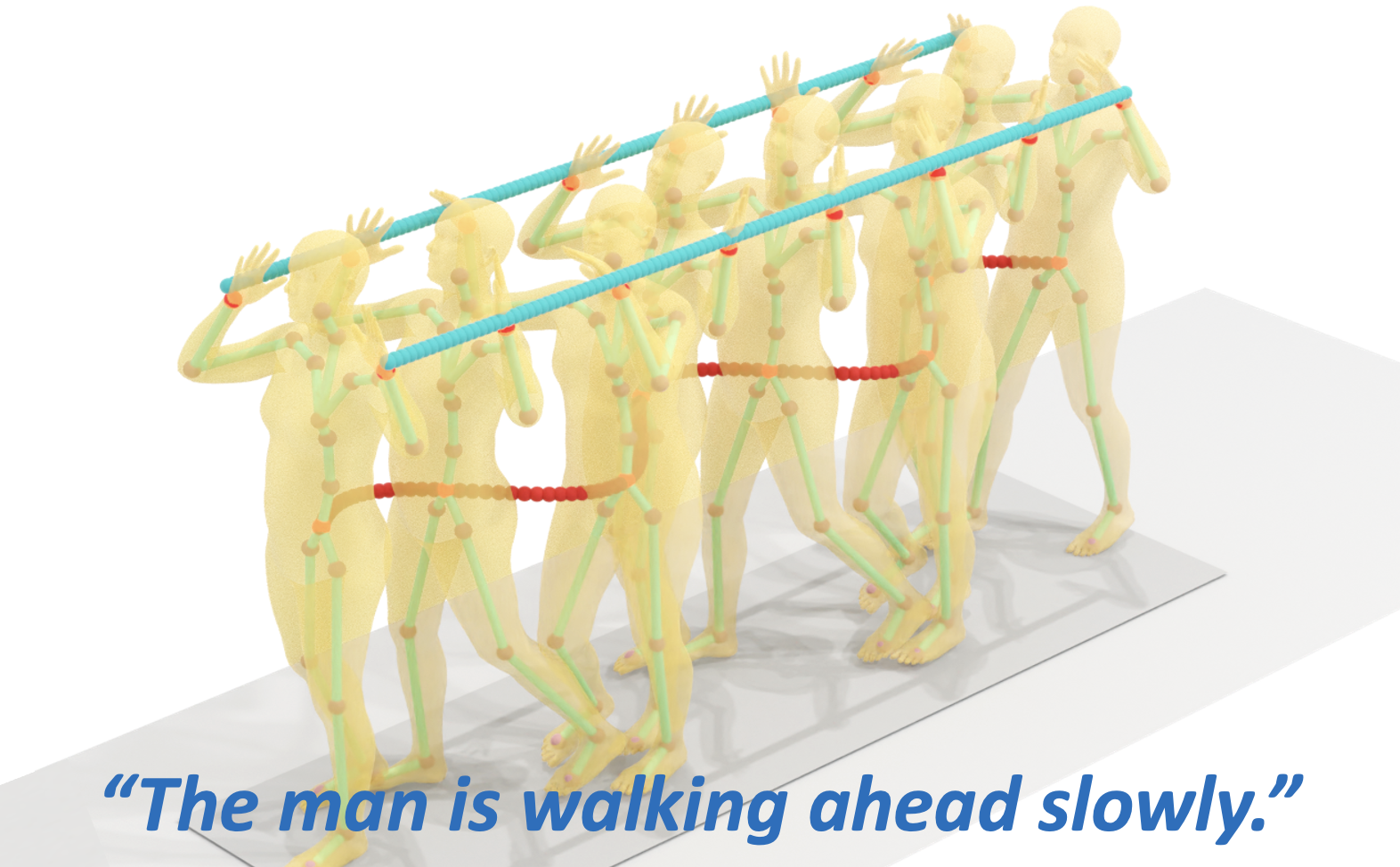

abstract

Controllable human motion synthesis is essential for applications in AR/VR, gaming, movies, and embodied AI. Existing methods often focus solely on either language or full trajectory control, lacking precision in synthesizing motions aligned with user-specified trajectories, especially for multi-joint control. To address these issues, we present TLControl, a new method for realistic human motion synthesis, incorporating both low-level trajectory and high-level language semantics controls. Specifically, we first train a VQ-VAE to learn a compact latent motion space organized by body parts. We then propose a Masked Trajectories Transformer to make coarse initial predictions of full trajectories of joints based on the learned latent motion space, with user-specified partial trajectories and text descriptions as conditioning. Finally, we introduce an efficient test-time optimization to refine these coarse predictions for accurate trajectory control. Experiments demonstrate that TLControl outperforms the state-of-the-art in trajectory accuracy and time efficiency, making it practical for interactive and high-quality animation generation.

- project page

- paper

- code

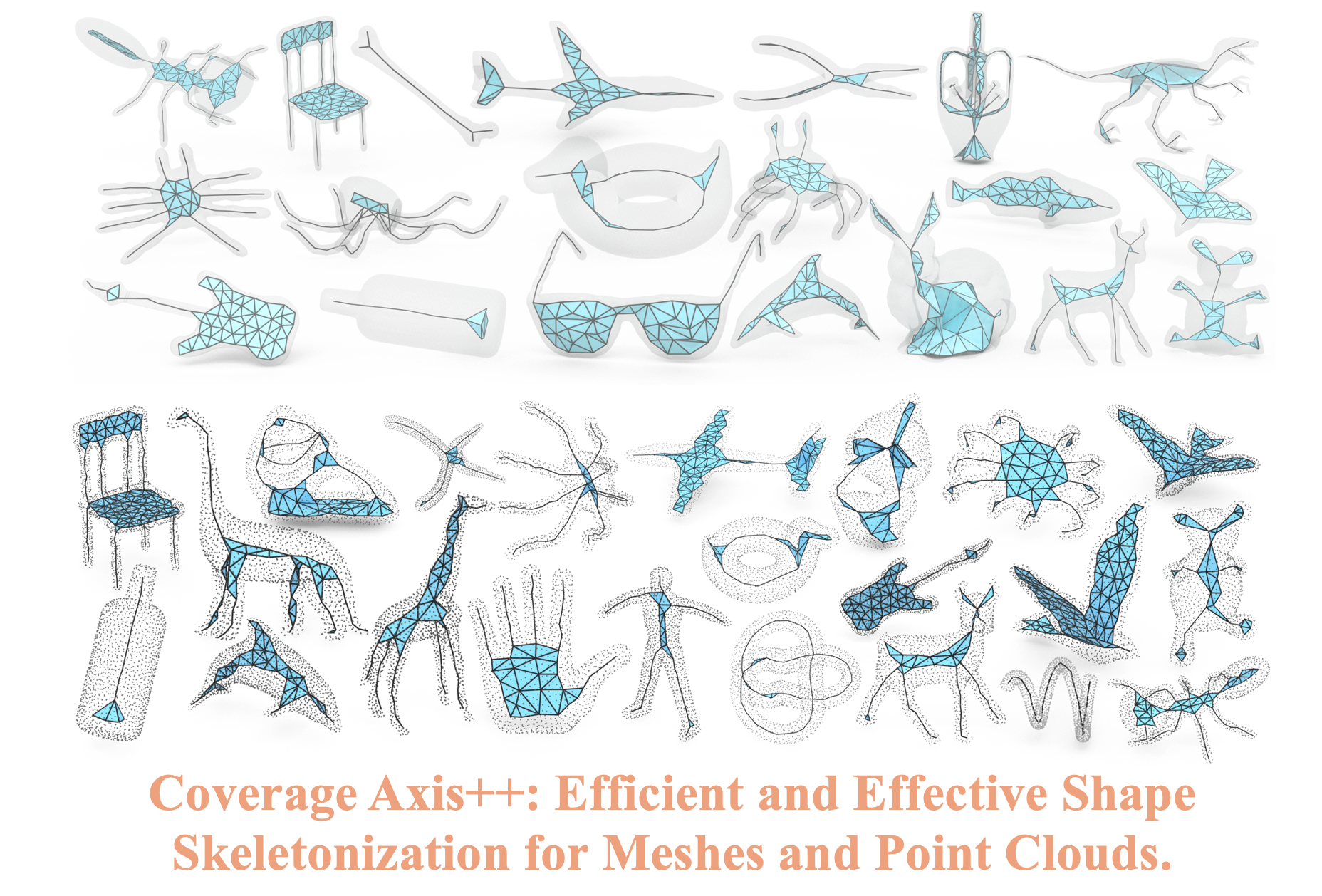

abstract

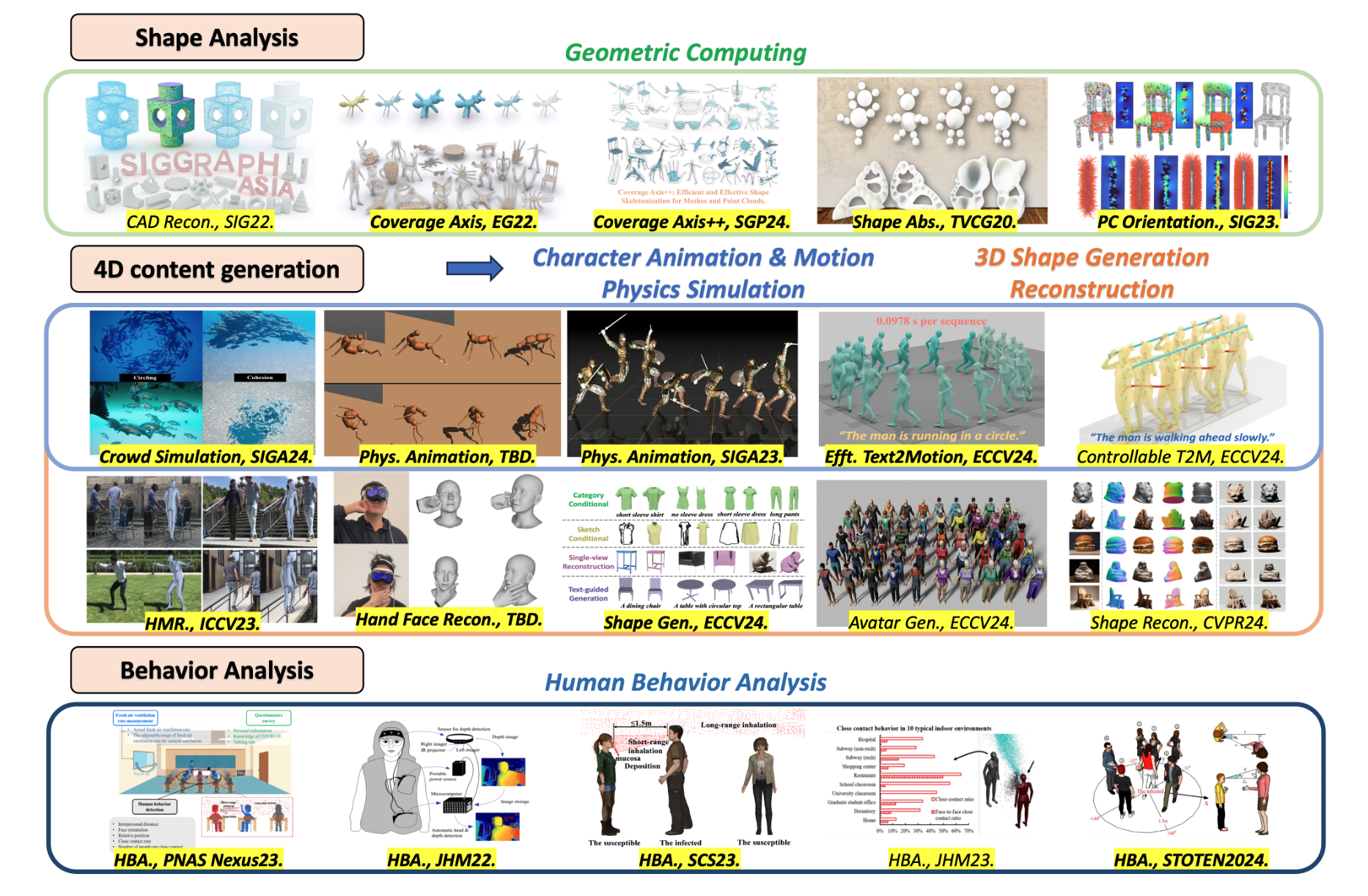

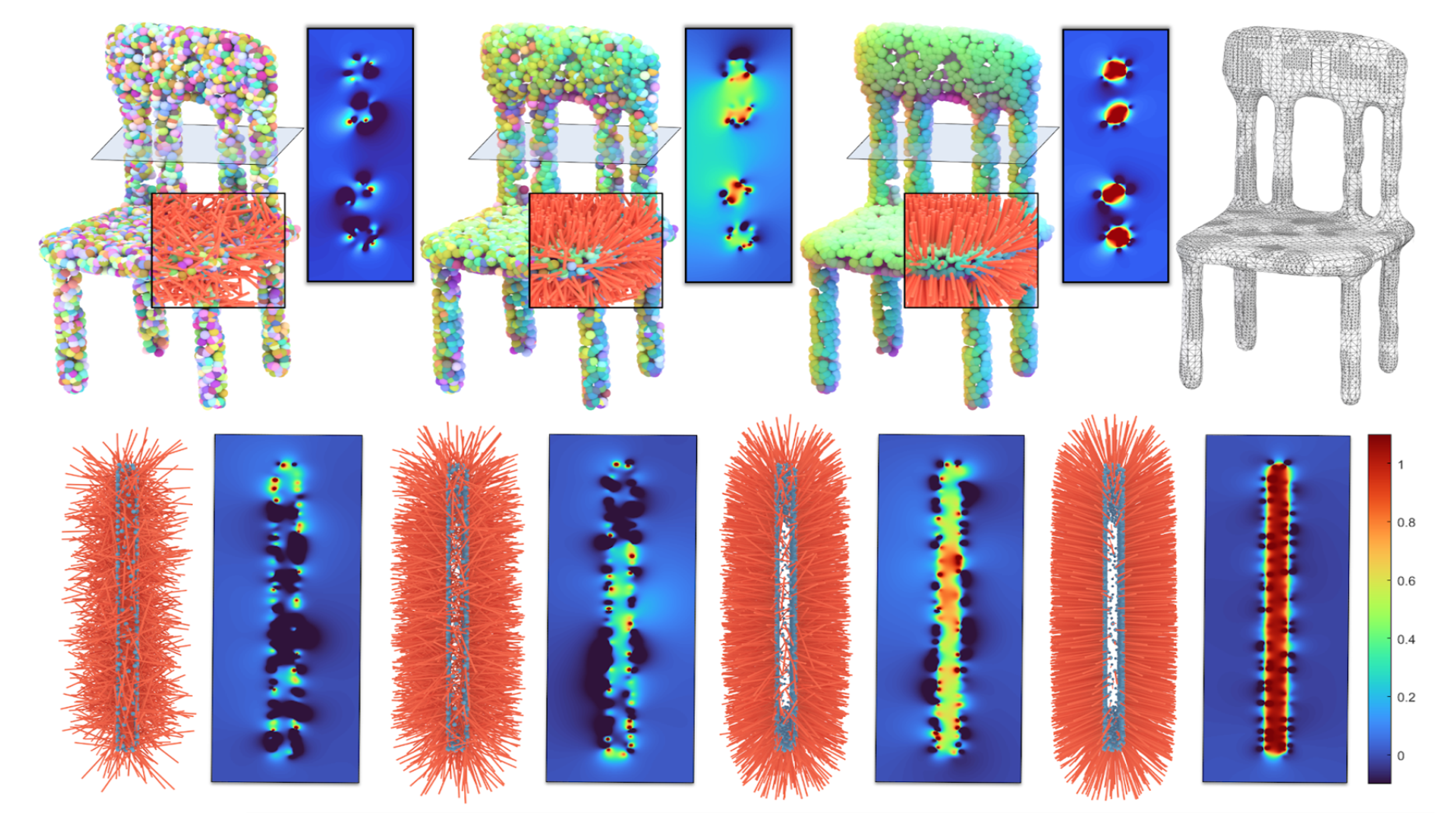

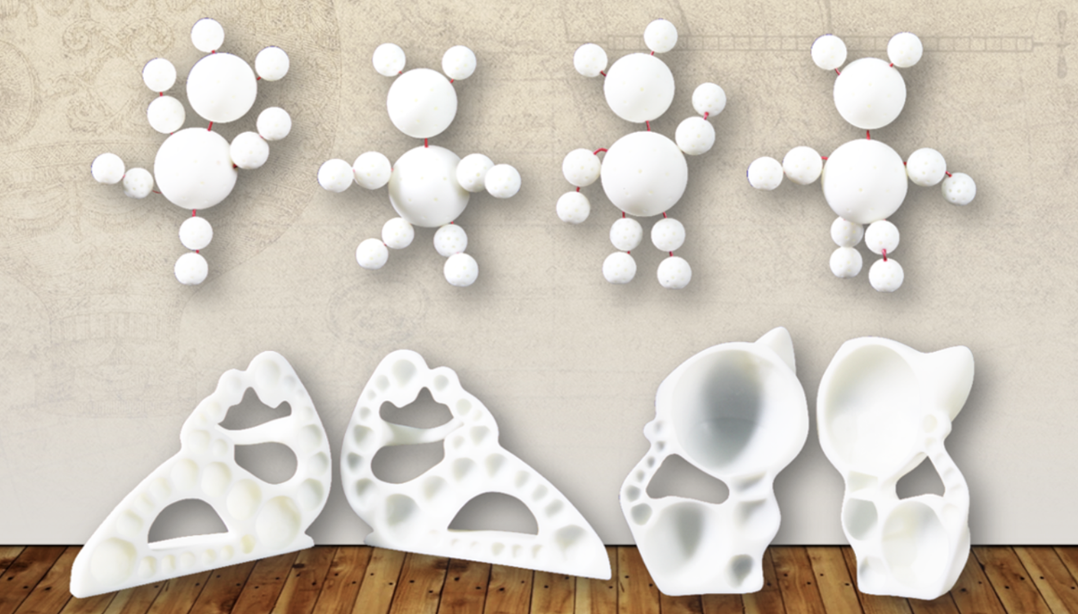

We introduce Coverage Axis++, a novel and efficient approach to 3D shape skeletonization. The current state-of-the-art approaches for this task often rely on the watertightness of the input or suffer from substantial computational costs, thereby limiting their practicality. To address this challenge, Coverage Axis++ proposes a heuristic algorithm to select skeletal points, offering a high-accuracy approximation of the Medial Axis Transform (MAT) while significantly mitigating computational intensity for various shape representations. We introduce a simple yet effective strategy that considers both shape coverage and uniformity to derive skeletal points. The selection procedure enforces consistency with the shape structure while favoring the dominant medial balls, which thus introduces a compact underlying shape representation in terms of MAT. As a result, Coverage Axis++ allows for skeletonization for various shape representations (e.g., water-tight meshes, triangle soups, point clouds), specification of the number of skeletal points, few hyperparameters, and highly efficient computation with improved reconstruction accuracy. Extensive experiments across a wide range of 3D shapes validate the efficiency and effectiveness of Coverage Axis++. The code will be publicly available once the paper is published.

- project page

- paper

- code

- Hugging Face Demo

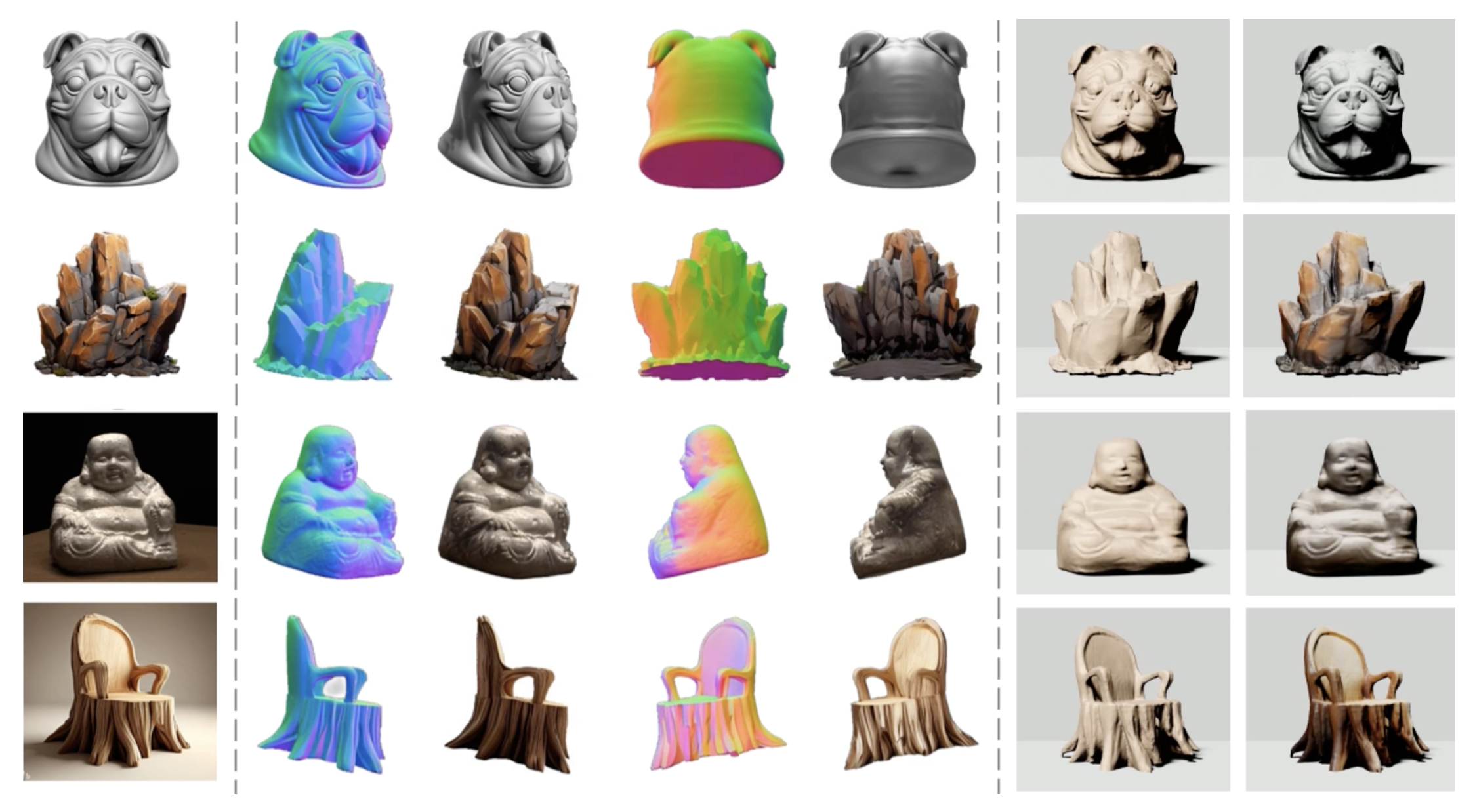

abstract

In this work, we introduce Wonder3D, a novel method for efficiently generating high-fidelity textured meshes from single-view images. Recent methods based on Score Distillation Sampling (SDS) have shown the potential to recover 3D geometry from 2D diffusion priors, but they typically suffer from time-consuming per-shape optimization and inconsistent geometry. In contrast, certain works directly produce 3D information via fast network inferences, but their results are often of low quality and lack geometric details. To holistically improve the quality, consistency, and efficiency of image-to-3D tasks, we propose a cross-domain diffusion model that generates multi-view normal maps and the corresponding color images. To ensure consistency, we employ a multi-view cross-domain attention mechanism that facilitates information exchange across views and modalities. Lastly, we introduce a geometry-aware normal fusion algorithm that extracts high-quality surfaces from the multi-view 2D representations. Our extensive evaluations demonstrate that our method achieves high-quality reconstruction results, robust generalization, and reasonably good efficiency compared to prior works.

- project page

- paper

- video

- code

abstract

We present C·ASE, an efficient and effective framework that learns Conditional Adversarial Skill Embeddings for physics-based characters. C·ASE enables the physically simulated character to learn a diverse repertoire of skills while providing controllability in the form of direct manipulation of the skills to be performed. This is achieved by dividing the heterogeneous skill motions into distinct subsets containing homogeneous samples for training a low-level conditional model to learn the conditional behavior distribution. The skill-conditioned imitation learning naturally offers explicit control over the character’s skills after training. The training course incorporates the focal skill sampling, skeletal residual forces, and element-wise feature masking to balance diverse skills of varying complexities, mitigate dynamics mismatch to master agile motions and capture more general behavior characteristics, respectively. Once trained, the conditional model can produce highly diverse and realistic skills, outperforming state-of-the-art models, and can be repurposed in various downstream tasks. In particular, the explicit skill control handle allows a high-level policy or a user to direct the character with desired skill specifications, which we demonstrate is advantageous for interactive character animation.

- project page

- paper

- code

abstract

In this paper, we introduce a set of simple yet effective TOken REduction (TORE) strategies for Transformer-based Human Mesh Recovery from monocular images. Current SOTA performance is achieved by Transformer-based structures. However, they suffer from high model complexity and computation cost caused by redundant tokens. We propose token reduction strategies based on two important aspects, i.e., the 3D geometry structure and 2D image feature, where we hierarchically recover the mesh geometry with priors from body structure and conduct token clustering to pass fewer but more discriminative image feature tokens to the Transformer. Our method massively reduces the number of tokens involved in high-complexity interactions in the Transformer. This leads to a significantly reduced computational cost while still achieving competitive or even higher accuracy in shape recovery. Extensive experiments across a wide range of benchmarks validate the superior effectiveness of the proposed method. We further demonstrate the generalizability of our method on hand mesh recovery. Our code will be publicly available once the paper is published.

- project page

- paper

- video

- code

- abstract

Estimating normals with globally consistent orientations for a raw point cloud has many downstream geometry processing applications. Despite tremendous efforts in the past decades, it remains challenging to deal with an unoriented point cloud with various imperfections, particularly in the presence of data sparsity coupled with nearby gaps or thin-walled structures. In this paper, we propose a smooth objective function to characterize the requirements of an acceptable winding-number field, which allows one to find the globally consistent normal orientations starting from a set of completely random normals. By taking the vertices of the Voronoi diagram of the point cloud as examination points, we consider the following three requirements: (1) the winding number is either 0 or 1, (2) the occurrences of 1 and the occurrences of 0 are balanced around the point cloud, and (3) the normals align with the outside Voronoi poles as much as possible. Extensive experimental results show that our method outperforms the existing approaches, especially in handling sparse and noisy point clouds, as well as shapes with complex geometry/topology.
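To make requirement (1) concrete, here is a minimal sketch of the standard generalized winding number for an oriented point cloud. It is not the objective function from the paper. The helper names (`fibonacci_sphere`, `winding_number`), the unit-sphere test shape, and the equal per-point area weights are all assumptions made for illustration.

```python
import math

def fibonacci_sphere(n):
    """Return n roughly uniform points on the unit sphere (illustrative sampling)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    pts = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(1.0 - z * z)
        phi = i * golden_angle
        pts.append((r * math.cos(phi), r * math.sin(phi), z))
    return pts

def winding_number(query, points, normals, areas):
    """Sum a_i * (p_i - q) . n_i / (4 * pi * |p_i - q|^3) over all samples."""
    total = 0.0
    for p, n, a in zip(points, normals, areas):
        d = (p[0] - query[0], p[1] - query[1], p[2] - query[2])
        dist = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
        dot = d[0] * n[0] + d[1] * n[1] + d[2] * n[2]
        total += a * dot / (4.0 * math.pi * dist ** 3)
    return total

# Assumed setup: a unit sphere whose outward normals equal the point positions.
N = 2000
points = fibonacci_sphere(N)
normals = points
areas = [4.0 * math.pi / N] * N  # equal share of the sphere's surface area

inside = winding_number((0.0, 0.0, 0.0), points, normals, areas)
outside = winding_number((3.0, 0.0, 0.0), points, normals, areas)
```

With consistently outward normals, `inside` is close to 1 and `outside` is close to 0. Flipping every normal flips the sign of the field, which is why the winding number is a useful signal for orientation.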

- project page

- paper

- code

- suppl.

- abstract

In this paper, we present a simple yet effective formulation called Coverage Axis for 3D shape skeletonization. Inspired by the set cover problem, our key idea is to cover all the surface points using as few inside medial balls as possible. This formulation inherently induces a compact and expressive approximation of the Medial Axis Transform (MAT) of a given shape. Different from previous methods that rely on local approximation error, our method allows a global consideration of the overall shape structure, leading to an efficient high-level abstraction and superior robustness to noise. Another appealing aspect of our method is its capability to handle more generalized input such as point clouds and poor-quality meshes. Extensive comparisons and evaluations demonstrate the remarkable effectiveness of our method for generating compact and expressive skeletal representation to approximate the MAT.
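The set-cover idea can be illustrated with a small greedy heuristic. This is a sketch only: the abstract does not describe the solver used in the paper, so the greedy rule stands in for it. The function name `greedy_ball_cover` and the input layout (surface samples as tuples, candidate inner balls as center/radius pairs) are hypothetical.

```python
import math

def greedy_ball_cover(points, balls):
    """Pick a small subset of candidate balls whose union covers every point.

    points: list of (x, y, z) tuples (hypothetical surface samples).
    balls:  list of ((cx, cy, cz), radius) candidate inner balls.
    Returns the indices of the selected balls, in selection order.
    """
    # coverage[j] holds the indices of the points that lie inside ball j.
    coverage = [
        {i for i, p in enumerate(points) if math.dist(p, c) <= r}
        for c, r in balls
    ]
    uncovered = set(range(len(points)))
    selected = []
    while uncovered:
        # Greedy step: take the ball covering the most still-uncovered points.
        best = max(range(len(balls)), key=lambda j: len(coverage[j] & uncovered))
        gained = coverage[best] & uncovered
        if not gained:
            raise ValueError("some points are not covered by any candidate ball")
        selected.append(best)
        uncovered -= gained
    return selected
```

Greedy selection does not guarantee the minimum number of balls, but it shows how the global covering objective favors a few large balls over many small ones.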

- paper

- abstract

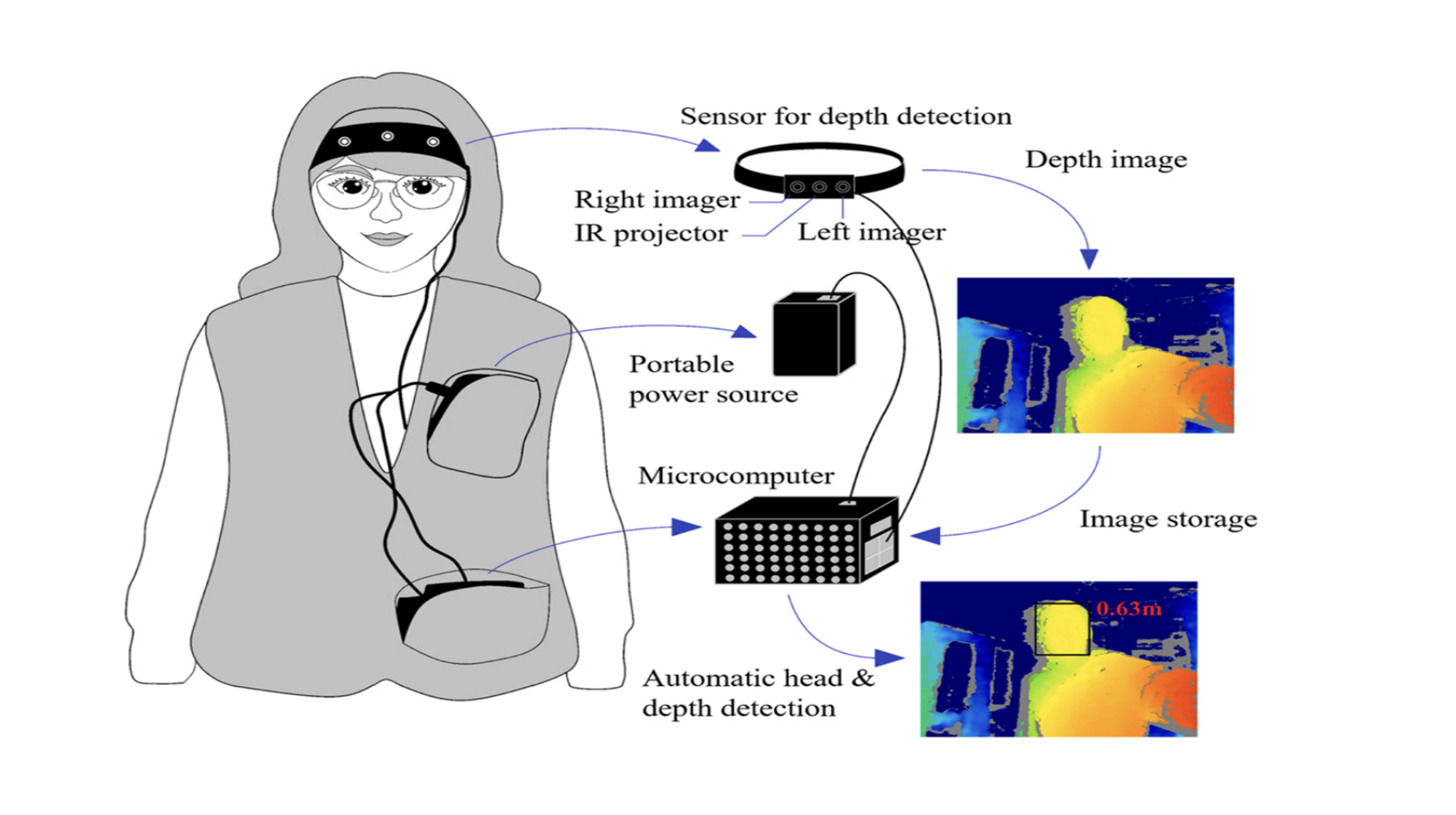

The risk of COVID-19 infection has increased due to the prolonged duration of travel and the frequent close interactions that come with the popularization of railway transportation. This study utilized depth detection devices to analyze the close contact behaviors of passengers in high-speed trains (HST), traditional trains (TT), the waiting area of the waiting room (WWR), and the ticket check area of the waiting room (CWR). A multi-route COVID-19 transmission model was developed to assess the risk of virus exposure in these scenarios under various non-pharmaceutical interventions. A total of 163,740 seconds of data were collected. The close contact ratios in HST, TT, WWR, and CWR were 5.8%, 64.0%, 7.7%, and 49.0%, respectively. The average interpersonal distances between passengers were 0.85 m, 0.92 m, 1.25 m, and 0.88 m, respectively. The probabilities of face-to-face contact were 9.5%, 70.0%, 64.2%, and 5.8% across the four environments, respectively. When all passengers wore N95 respirators or surgical masks, the personal virus exposure via close contact could be reduced by 94.1% and 51.9%, respectively. The virus exposure in TT is dozens of times that in HST. In China, if all current railway traffic were replaced by HST, the total virus exposure of passengers could be reduced by roughly 50%.

- project page

- paper

- press release

-

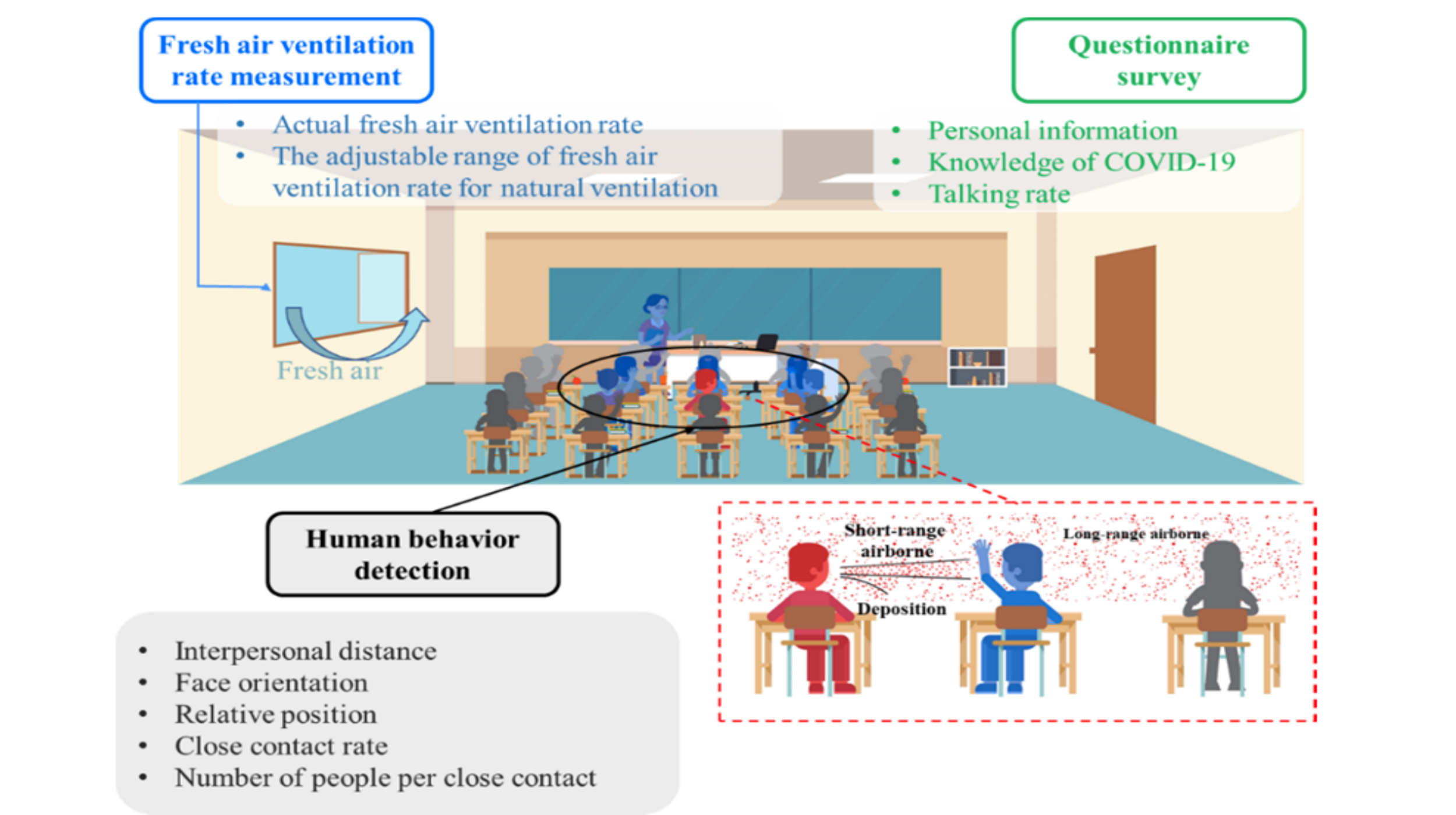

abstract

Classrooms are high-risk indoor environments, so analysis of SARS-CoV-2 transmission in classrooms is important for determining optimal interventions. Due to the absence of human behavior data, it is challenging to accurately determine virus exposure in classrooms. A wearable device for close contact behavior detection was developed, and we recorded more than 250,000 data points of close contact behaviors of students from Grades 1 through 12. Combined with a survey on students’ behaviors, we analyzed virus transmission in classrooms. Close contact rates for students were 37%±11% during classes and 48%±13% during breaks. Students in lower grades had higher close contact rates and virus transmission potential. The long-range airborne transmission route is dominant, accounting for 90%±3.6% and 75%±7.7% with and without mask wearing, respectively. During breaks, the short-range airborne route became more important, contributing 48%±3.1% in Grades 1 to 9 (without wearing masks). Ventilation alone cannot always meet the demands of COVID-19 control; 30 m³/h/person is suggested as the threshold outdoor air ventilation rate in classrooms. This study provides scientific support for COVID-19 prevention and control in classrooms, and our proposed human behavior detection and analysis methods offer a powerful tool for understanding virus transmission characteristics and can be employed in various indoor environments.

- project page

- paper

-

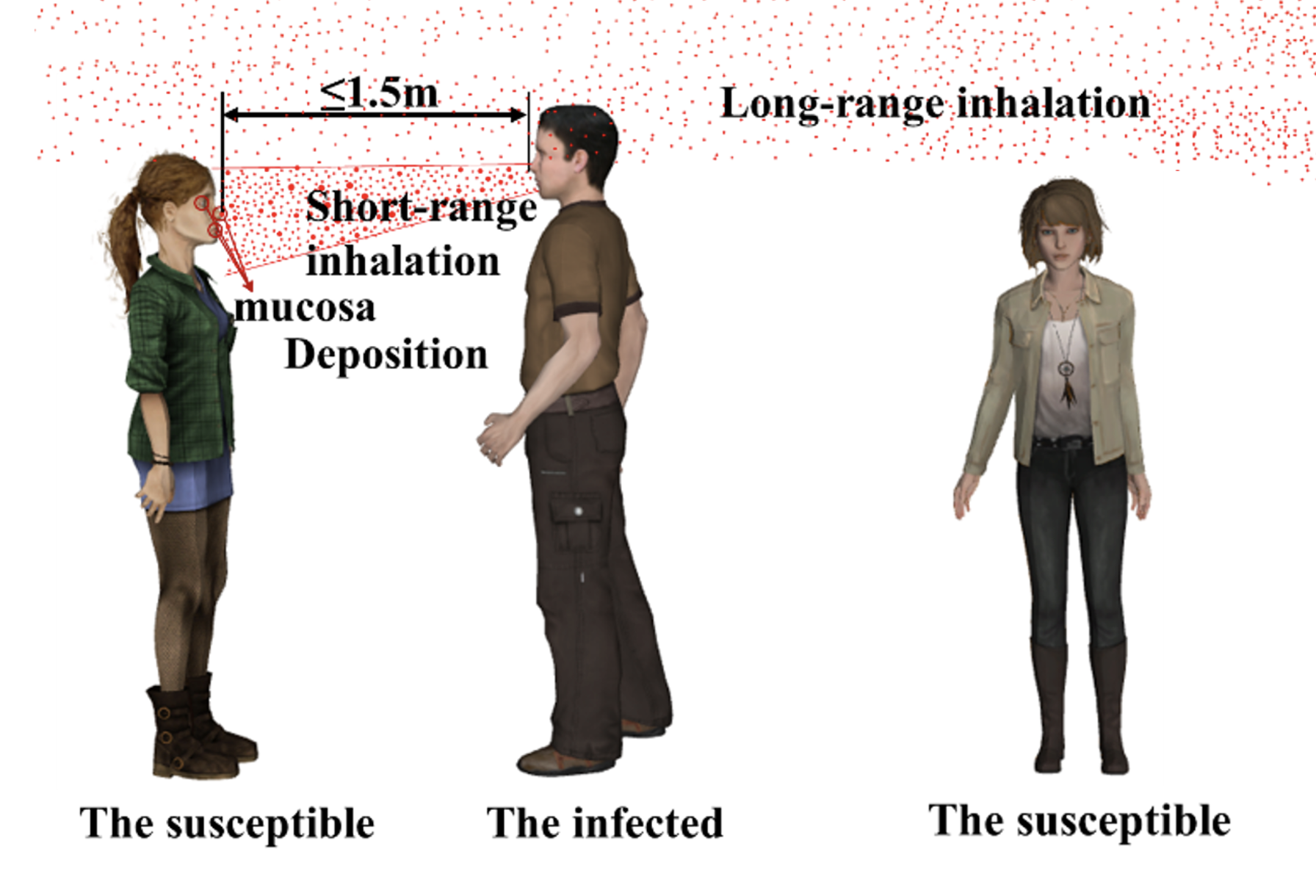

abstract

During the COVID-19 pandemic, analysis of virus exposure and intervention efficiency in public transport based on real passengers’ close contact behaviors is critical to curbing infectious disease transmission. A monitoring device was developed to gather a total of 145,821 close contact data points in subways based on semi-supervised learning. A virus transmission model considering both short- and long-range inhalation and deposition was established to calculate the virus exposure. During rush hour, short-range inhalation exposure is 3.2 times higher than deposition exposure and 7.5 times higher than long-range inhalation exposure of all passengers in the subway. The close contact rate was 56.1% and the average interpersonal distance was 0.8 m. Face-to-back was the main pattern during close contact. Compared with a random distribution, if all passengers stand facing the same direction, personal virus exposure through inhalation (deposition) can be reduced by 74.1% (98.5%). If the talk rate were decreased from 20% to 5%, the inhalation (deposition) exposure could be reduced by 69.3% (73.8%). In addition, we found that virus exposure could be reduced by 82.0% if all passengers wear surgical masks. This study provides scientific support for COVID-19 prevention and control in subways based on real human close contact behaviors.

- paper

- abstract

Motivated by the fact that the medial axis transform is able to encode nearly the complete shape, we propose to use as few medial balls as possible to approximate the original volume enclosed by the boundary surface. We progressively select new medial balls, in a top-down style, to enlarge the region spanned by the existing medial balls. The key idea of the selection strategy is to encourage large medial balls while imposing given geometric constraints. We further propose a speedup technique based on a provable observation that the intersection of medial balls implies the adjacency of power cells (in the sense of the power crust). We then elaborate the selection rules in combination with two closely related applications. One application is an easy-to-use ball-stick modeling system that helps non-professional users quickly build a shape with only balls and wires, where any penetration between two medial balls must be suppressed. The other application is to generate porous structures with convex, compact (with a high isoperimetric quotient), and shape-aware pores, where two adjacent spherical pores may penetrate each other as long as the mechanical rigidity is well preserved.

Shuyang Xu, Zhiyang Dou†§, Yiduo Hao, Minghao Guo, Liang Pan, Jingbo Wang, Cheng Lin, Yuan Liu, Wenping Wang, Chuang Gan, Mingmin Zhao, Wojciech Matusik, Taku Komura†.

§ Work done during Summer 2025.

Libo Zhang, Zekun Li, Tianyu Li, Zeyu Cao, Rui Xu, Xiao-Xiao Long, Wenjia Wang, Jingbo Wang, Yuan Liu, Wenping Wang, Daquan Zhou, Taku Komura, Zhiyang Dou†§.

§ Work done during Summer 2025.

Qingxuan Wu, Zhiyang Dou, Chuan Guo, Yiming Huang, Qiao Feng, Bing Zhou, Jian Wang, Lingjie Liu.

ICLR 2026.

Shuyang Xu*, Zhiyang Dou*†, Mingyi Shi, Liang Pan, Leo Ho, Jingbo Wang, Yuan Liu, Cheng Lin, Yuexin Ma, Wenping Wang†, Taku Komura†.

NeurIPS 2025 (Spotlight). 13% of accepted papers.

Zhiyang Dou*, Chen Peng*, Xinyu Lu, Xiaohan Ye, Lixing Fang, Yuan Liu, Wenping Wang, Chuang Gan, Lingjie Liu, Taku Komura†.

ACM Transactions on Graphics. SIGGRAPH Asia 2025.

Yiming Dong*, Hongxu Xin*, Zhiyang Dou†, Rui Xu, Yuan Liu, Shuangmin Chen, Shiqing Xin, Changhe Tu†, Taku Komura, Wenping Wang.

ACM Transactions on Graphics. SIGGRAPH Asia 2025.

Yiming Huang, Zhiyang Dou, Lingjie Liu.

ICCV 2025.

Ke Fan, Shulin Lu, Minyue Dai, Runyi Yu, Lixing Xiao, Zhiyang Dou, Junting Dong, Lizhuang Ma, Jingbo Wang.

ICCV 2025 (Highlight).

Liang Pan, Zeshi Yang, Zhiyang Dou, Wenjia Wang, Buzhen Huang, Bo Dai, Taku Komura, Jingbo Wang.

CVPR 2025 (Oral). 3.3% of accepted papers.

Also the 1st Workshop on Humanoid Agents at CVPR 2025 Spotlight.

Jiahao Lu*, Tianyu Huang*, Peng Li, Zhiyang Dou, Cheng Lin, Zhiming Cui, Zhen Dong, Sai-Kit Yeung, Wenping Wang, Yuan Liu.

CVPR 2025 (Highlight). 13.5% of accepted papers.

Chuhao Chen, Zhiyang Dou, Chen Wang, Yiming Huang, Anjun Chen, Qiao Feng, Jiatao Gu, Lingjie Liu.

CVPR 2025.

Zilong Wang, Zhiyang Dou†, Yuan Liu, Cheng Lin, Xiao Dong, Yunhui Guo, Chenxu Zhang, Xin Li, Wenping Wang, Xiaohu Guo†.

IEEE Transactions on Visualization and Computer Graphics 2025.

Qingxuan Wu, Zhiyang Dou†, Sirui Xu, Soshi Shimada, Chen Wang, Zhengming Yu, Yuan Liu, Cheng Lin, Zeyu Cao, Taku Komura, Vladislav Golyanik, Christian Theobalt, Wenping Wang, Lingjie Liu†.

ICLR 2025.

Zhou Xian*, Yiling Qiao*, Zhenjia Xu*, Tsun-Hsuan Wang*, Zhehuan Chen*, Juntian Zheng*, Ziyan Xiong*, Yian Wang*, Mingrui Zhang*, Pingchuan Ma*, Yufei Wang*, Zhiyang Dou*, et al.

Zhiyang Dou.

Doctoral Consortium — ECCV 2024 and EUROGRAPHICS 2025.

Yifan Wu*, Zhiyang Dou*, Yuko Ishiwaka, Shun Ogawa, Yuke Lou, Wenping Wang, Lingjie Liu, Taku Komura.

ACM Transactions on Graphics. SIGGRAPH Asia 2024.

Yuming Feng*, Zhiyang Dou*†, Ling-Hao Chen, Yuan Liu, Tianyu Li, Jingbo Wang, Zeyu Cao, Wenping Wang, Taku Komura, Lingjie Liu†.

arXiv 2024.

Zhengming Yu*, Zhiyang Dou*, Xiaoxiao Long, Cheng Lin, Zekun Li, Yuan Liu, Norman Müller, Taku Komura, Marc Habermann, Christian Theobalt, Xin Li, Wenping Wang.

ECCV 2024.

Wenyang Zhou, Zhiyang Dou†, Zeyu Cao, Zhouyingcheng Liao, Jingbo Wang, Wenjia Wang, Yuan Liu, Taku Komura, Wenping Wang, Lingjie Liu.

ECCV 2024.

† Project Lead.

Weilin Wan, Zhiyang Dou, Taku Komura, Wenping Wang, Dinesh Jayaraman, Lingjie Liu.

ECCV 2024.

Zimeng Wang*, Zhiyang Dou*, Rui Xu, Cheng Lin, Yuan Liu, Xiaoxiao Long, Shiqing Xin, Taku Komura, Xiaoming Yuan, Wenping Wang.

ACM SIGGRAPH/Eurographics Symposium on Geometry Processing 2024.

A follow-up of Coverage Axis.

Xiaoxiao Long*, Yuanchen Guo*, Cheng Lin, Yuan Liu, Zhiyang Dou, Lingjie Liu, Yuexin Ma, Song-Hai Zhang, Marc Habermann, Christian Theobalt, Wenping Wang.

CVPR 2024 (Highlight). 11.9% of accepted papers.

Zhiyang Dou, Xuelin Chen, Qingnan Fan, Taku Komura, Wenping Wang.

SIGGRAPH Asia 2023.

Zhiyang Dou*, Qingxuan Wu*, Cheng Lin, Zeyu Cao, Qiangqiang Wu, Weilin Wan, Taku Komura, Wenping Wang.

ICCV 2023.

Rui Xu, Zhiyang Dou, Ningna Wang, Shiqing Xin, Shuangmin Chen, Mingyan Jiang, Xiaohu Guo, Wenping Wang, Changhe Tu.

ACM Transactions on Graphics. SIGGRAPH 2023.

SIGGRAPH 2023 Best Paper Award; See more here.

Zhiyang Dou, Cheng Lin, Rui Xu, Lei Yang, Shiqing Xin, Taku Komura, Wenping Wang.

Computer Graphics Forum. EUROGRAPHICS 2022.

Top Cited Article in CGF 2022-2023. [Link]

Fast-Forward Attendees Award at EG22, 2nd Place.

Nan Zhang, Xiyue Liu, Shuyi Gao, Boni Su, Zhiyang Dou†.

Sustainable Cities and Society (SCS) 2023.

Yong Guo*, Zhiyang Dou*, Nan Zhang, Xiyue Liu, Boni Su, Yuguo Li, Yinping Zhang.

PNAS Nexus 2023.

This research has been featured in a press release by EurekAlert!

Xiyue Liu*, Zhiyang Dou*, Lei Wang, Boni Su, Tianyi Jin, Yong Guo, Jianjian Wei, Nan Zhang.

Journal of Hazardous Materials (JHM) 2022.

Zhiyang Dou, Shiqing Xin, Rui Xu, Jian Xu, Yuanfeng Zhou, Shuangmin Chen, Wenping Wang, Xiuyang Zhao, Changhe Tu.

IEEE Transactions on Visualization and Computer Graphics 2020.

Tutorials

ICCV 2025.

- project page

- abstract

Human motion generation is an important area of research with applications in virtual reality, gaming, animation, robotics, and AI-driven content creation. Generating realistic and controllable human motion is essential for creating interactive digital environments, improving character animation, and enhancing human-computer interaction. Recent advances in deep learning have made it possible to automate motion generation, reducing the need for expensive motion capture and manual animation. Techniques such as diffusion models, generative masked modeling, and variational autoencoders (VAEs) have been used to synthesize diverse and realistic human motion. Transformer-based models have improved the ability to capture temporal dependencies, leading to smoother and more natural movement. In addition, reinforcement learning and physics-based methods have helped create physically plausible and responsive motion, which is useful for applications like robotics and virtual avatars. Human motion generation research spans across computer vision, computer graphics, and robotics. However, many researchers and developers may not be familiar with the latest advances and challenges in this area. This tutorial will provide an introduction to human motion generation, covering key methods, recent developments, and practical applications. We will also discuss open research problems and opportunities for future work. The tutorial will cover the following topics: 1) human motion generation basics, 2) kinematic-based generation methods, 3) physics-based generation methods, 4) controllability of human motion generation, 5) human-object/human/scene interactions, 6) co-speech gesture synthesis, and 7) open research problems.

Services

Tutorial Organizer: 3D Human Motion Generation and Simulation Tutorial, ICCV 2025.

Reviewer:

- Learning:ICLR; NeurIPS; AAAI; Pattern Recognition; Neural Networks; ICONIP; FSDM; MLIS; NeurIPSW.

- Graphics:SIGGRAPH; SIGGRAPH ASIA; ACM TOG; EUROGRAPHICS; TVCG [Link]; PG; GM; CAD (CADJ); GMP; CG; CVM; CGI; SIGGRAPH Poster; Graphics Replicability Stamp.

- Computer Vision:CVPR; ICCV; ECCV; 3DV; BMVC; TIP; TCSVT; TMM; ACM Multimedia; CVPRW; ECCVW.

- Human Behavior Analysis:Sustainable Cities and Society (SCS).

- Robotics:RAL.

- Others:COMPUT J; Scientific (BrainSTEM@HKU).

NeurIPS25W: What Makes a Good Video (NextVid);

ICCV25W: AI for Visual Arts Workshop and Challenges (AI4VA);

CVPR25W: 4D Vision Modeling the Dynamic World; Human Motion Generation;

CVPR24W: Generative Models for Computer Vision; Human Motion Generation;

ECCV24W: Wild3D, AI4VA, OOD-CV.

Program Committee & Evaluation Committee: CGI 2025; Graphics Replicability Stamp.

Teaching Assistant:

- 2023:COMP3271 Computer Graphics. Worked with Prof. Taku Komura.

- 2022:COMP3362 Hands-on AI: Experimentation and Applications. Worked with Dr. Yi-King Choi.

- 2021:COMP3362 Hands-on AI: Experimentation and Applications. Worked with Dr. Yi-King Choi.

- 2020:COMP2120 Computer Organization. Worked with Prof. Kwok-Ping Chan.

Talks (Past and Upcoming):

- Mar. 2026:MOSPA: Human Motion Generation Driven by Spatial Audio, AI/ML and Computer Vision Meetup (Voxel51).

- Jul. 2025:Physics-based Character Animation and Simulation Platform (On behalf of HKU CGVU Lab), ChinaSI.

- Jul. 2025:From Static 3D Geometry to Dynamic 4D Contents: Analysis, Recovery, and Generation, China Society of Image and Graphics.

- Jul. 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, University of Science and Technology of China.

- Jul. 2025:Physics-based Motion Control, University of Science and Technology of China.

- Jul. 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Zhejiang University.

- Jun. 2025:From Static 3D Geometry to Dynamic 4D Contents: Analysis, Recovery, and Generation, VALSE.

- May 2025:Toward Fully Automated 4D Content Creation: Challenges and Future Directions, Stealth Startup.

- May 2025:Principles and Practices for Efficient, Controllable, and Physically Plausible Motion Synthesis, MiHoYo.

- May 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, The Hong Kong Polytechnic University.

- May 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Stealth Startup.

- May 2025:From Static 3D Geometry to Dynamic 4D Contents: Analysis, Recovery, and Generation, EUROGRAPHICS DC.

- Apr. 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Shandong University.

- Apr. 2025:From Static 3D Geometry to Dynamic 4D Contents: Analysis, Recovery, and Generation, BAAI.

- Mar. 2025:Human-Centric Spatial AI for Close Contact Behavior Analysis, Beijing University of Technology.

- Feb. 2025: World Models for Physical Agent Control, Honda Research (GRASP Lab Visit).

- Feb. 2025:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Technion.

- Dec. 2024:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Nvidia.

- Dec. 2024:Towards a Universal Motion Foundation Model, Stealth Startup.

- Oct. 2024:On Efficient, Controllable, and Physically Plausible Motion Synthesis, Meta.

- Oct. 2024:Research Sharing, Shandong University.

- Oct. 2024:Addressing the Challenge of Data Scarcity in Motion Synthesis, Shanghai AI Lab.

- Oct. 2024:On Efficient, Controllable, and Physically Plausible Motion Synthesis, ShanghaiTech University.

- Oct. 2024:On Efficient, Controllable, and Physically Plausible Motion Synthesis, ChinaGraph.

- Aug. 2024:On Efficient, Controllable, and Physically Plausible Motion Synthesis, MiHoYo.

- Apr. 2024:On the Readily Deployable System for Detecting Close Contact Behaviors, Boeing.

- Dec. 2023:Shape Analysis, Recovery and Generation with Geometric and Topological Priors, Stealth Startup.

- Nov. 2023:Geometric Computing - Medial Axis Transform and Normal Orientation for Point Clouds, ShanghaiTech University.

- Oct. 2023:Scalable Skill Embeddings for Physics-based Characters, Tencent Games.

- Jun. 2023:Robust and Efficient Vision Systems for Close Contact Behavior Analysis, Beijing University of Technology.

- Feb. 2023:Scalable Skill Embeddings for Physics-based Characters, Shandong University.

- Oct. 2022:On Efficient Hand-to-Surface Contact Estimation, Boeing.

Awards, Scholarships and Honors

- Mar. 2025:Durlach Graduate Fellowship.

- Feb. 2025:Meshy Fellowship Finalist. [Link]

- Jul. 2024:Top Cited Article in CGF 2022-2023. [Link]

- Jul. 2024:HKU Foundation First Year Excellent Ph.D. Award 2023/24. [Link]

- Oct. 2023:The Best Paper Award, SIGGRAPH 2023. [Link]

- Oct. 2020:Postgraduate Scholarship.

- Oct. 2019:National Scholarship.

- Dec. 2019:Presidential Scholarship.

- Oct. 2018:National Scholarship.

Competitions

- 2019:National First Prize, National Mathematical Modeling Contest.

- 2019:Meritorious Winner, International Mathematical Modeling Contest: The Mathematical Contest in Modeling (MCM).

- 2018:National First Prize, The Best Paper Award (8/38573), National Mathematical Modeling Contest.

- 2018:Meritorious Winner, International Mathematical Modeling Contest: The Interdisciplinary Contest in Modeling (ICM).

- 2018:National Grand Prize (1st), Most Commercially Valuable Award, Most Popular Award; The 11th National University Student Software Innovation Contest.

Misc.

I used to be:

- a soccer player and a middle-distance runner (substitute for my city in youth sports events; once completed a 1000-meter run in 3 minutes).

- an electric guitar player (rhythm, solo sometimes). Mainly played Cantonese music from my favorite band: Beyond.

I love Ghost in the Shell[1][2][3][4]. I doubt existence and reality, somewhat and somehow.

"It's better to burn out than to fade away." — Hey Hey, My My (Into the Black) / My My, Hey Hey (Out of the Blue).